Content from Access epidemiological delay distributions

Last updated on 2026-02-09 | Edit this page

Overview

Questions

- How to get access to disease delay distributions from a pre-established database for use in analysis?

Objectives

- Get delays from a literature search database with epiparameter.

- Get distribution parameters and summary statistics of delay distributions.

Prerequisites

This episode requires you to be familiar with:

Data science : Basic programming with R.

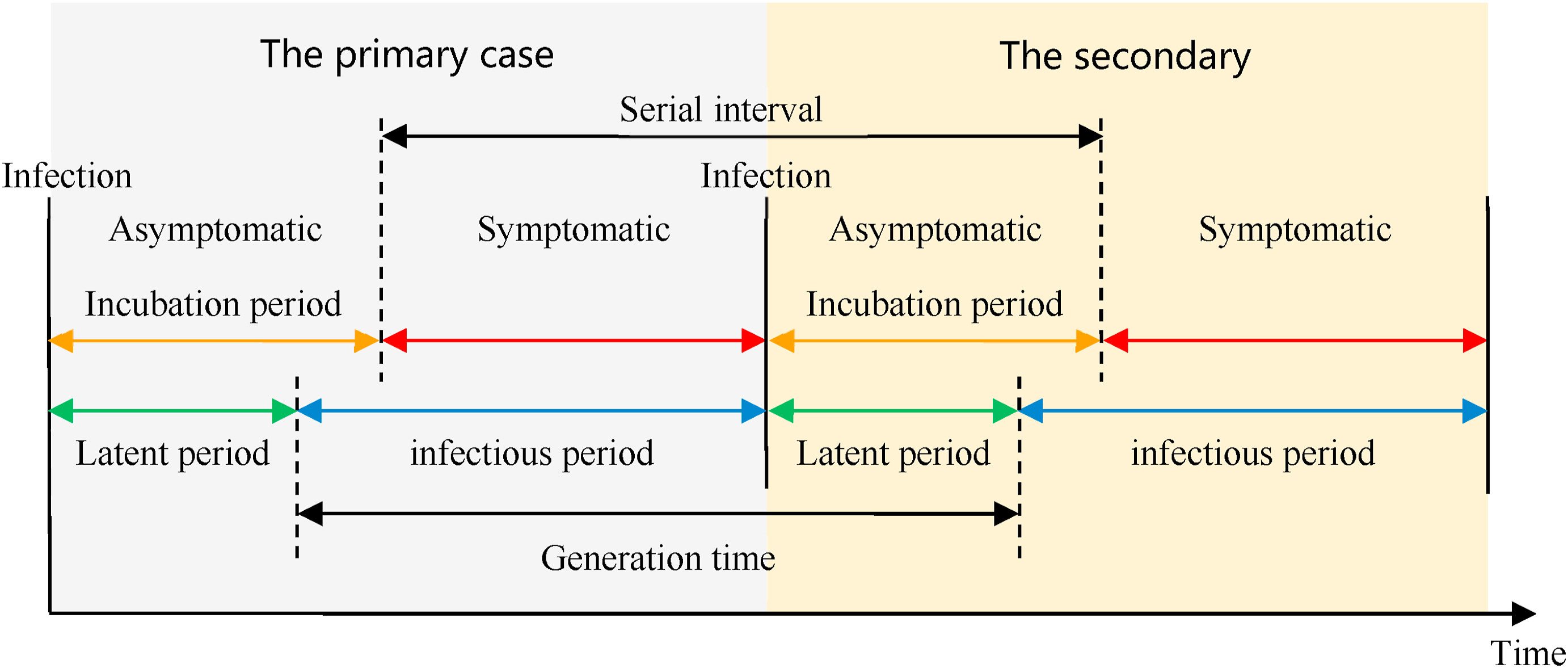

Epidemic theory : epidemiological parameters, disease time periods, such as the incubation period, generation time, and serial interval.

Introduction

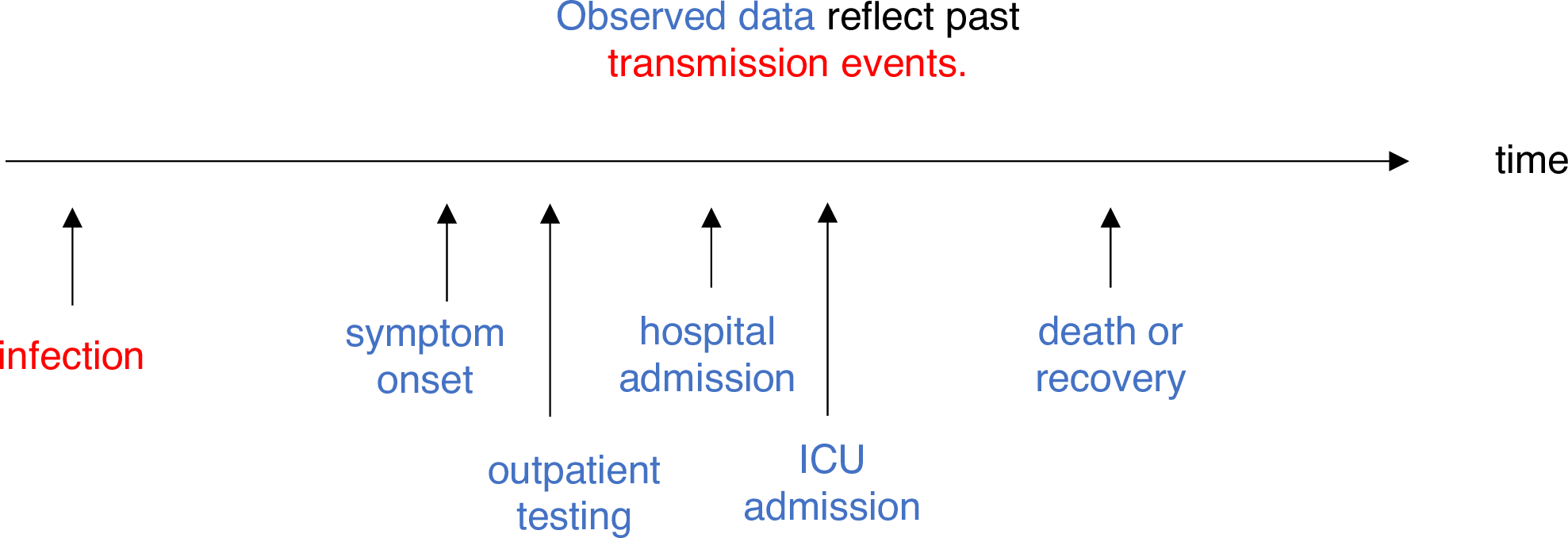

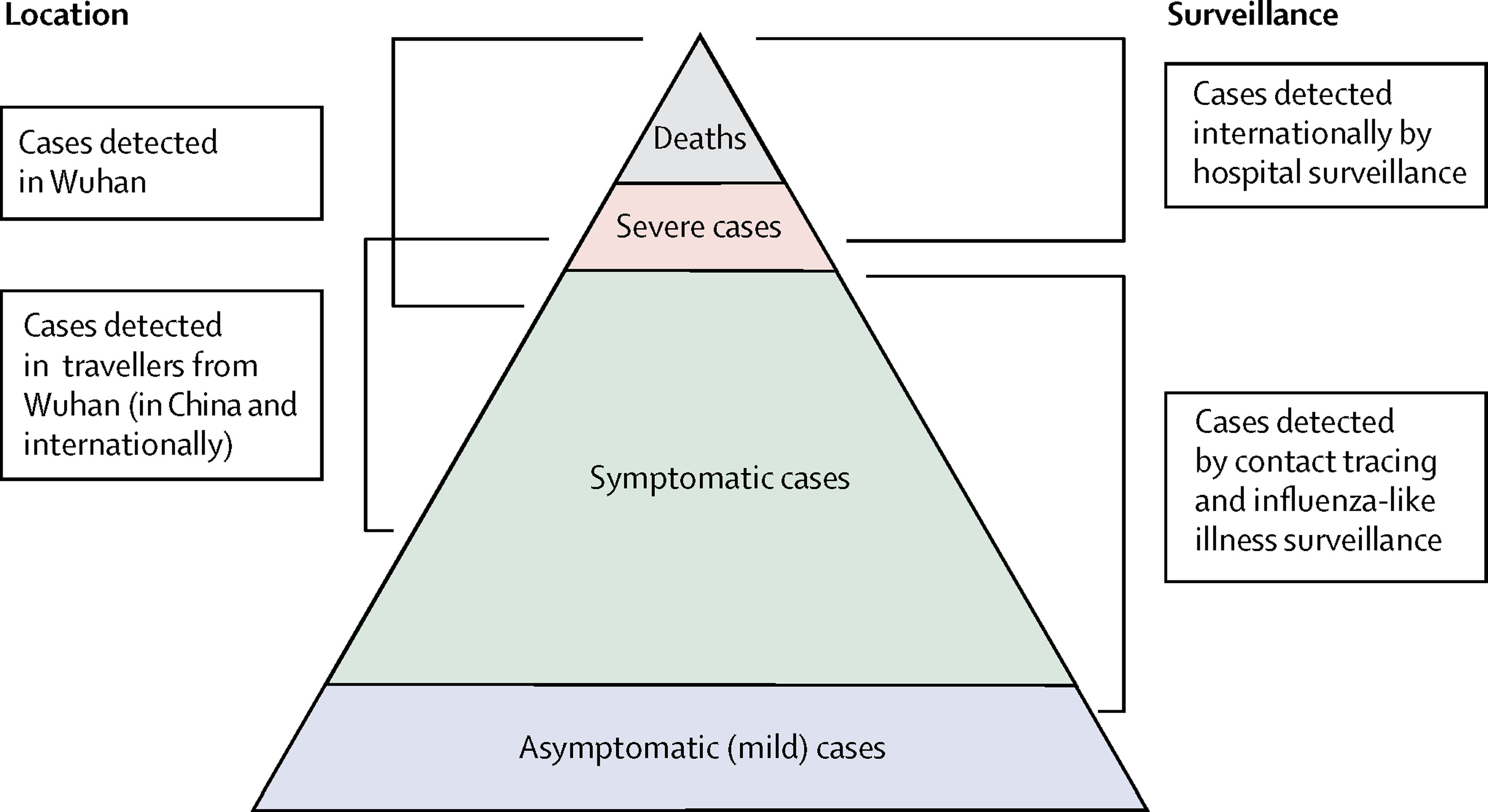

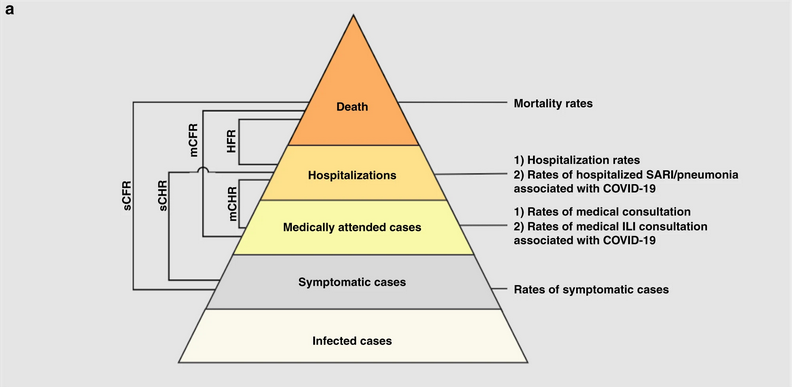

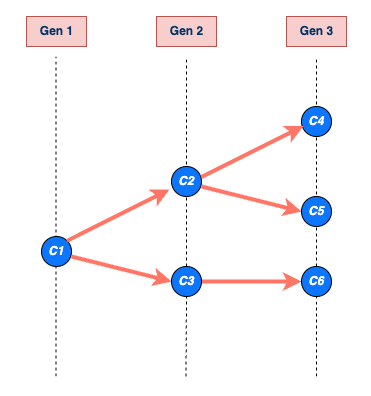

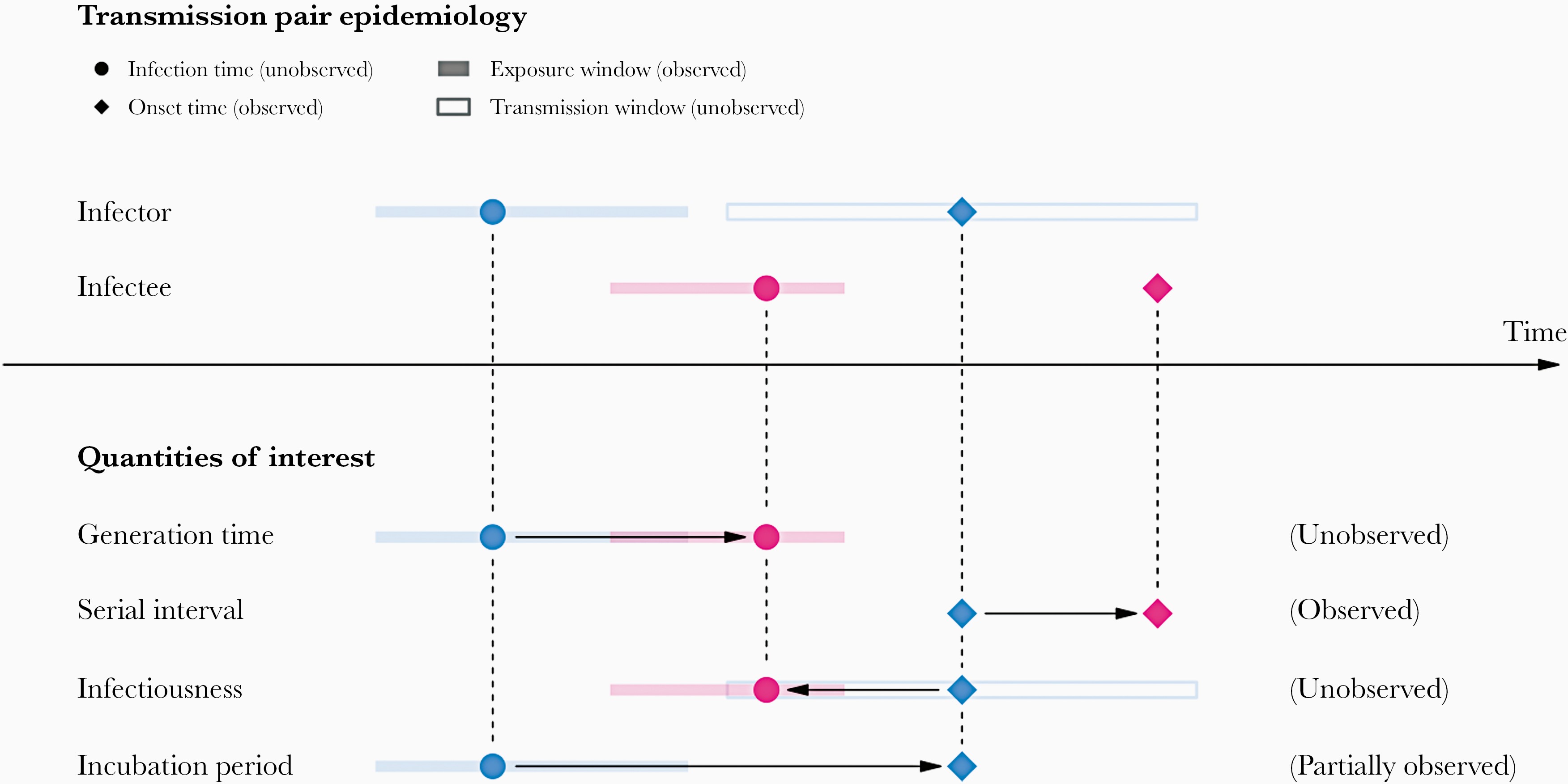

Infectious diseases follow an infection cycle, which usually includes the following phases: presymptomatic period, symptomatic period and recovery period, as described by their natural history. These time periods can be used to understand transmission dynamics and inform disease prevention and control interventions.

Definitions

Look at the glossary for the definitions of all the time periods of the figure above!

However, early in an epidemic, efforts to understand the epidemic and

implications for control can be delayed by the lack of an easy way to

access key parameters for the disease of interest (Nash et al., 2023).

Projects like epiparameter and {epireview}

are building online catalogues following literature synthesis protocols

that can help inform analysis and parametrise models by providing a

library of previously estimated epidemiological parameters from past

outbreaks.

To illustrate how to use the epiparameter R package in your analysis pipeline, our goal in this episode will be to access one specific set of epidemiological parameters from the literature, instead of extracting from papers and copying and pasting them by hand. We will then plug them into an EpiNow2 analysis workflow.

Let’s start loading the epiparameter package. We’ll

use the pipe %>% to connect some of its functions, some

tibble and dplyr functions, so let’s also

call to the tidyverse package:

R

library(epiparameter)

library(tidyverse)

The double-colon

The double-colon :: in R lets you call a specific

function from a package without loading the entire package into the

current environment.

For example, dplyr::filter(data, condition) uses

filter() from the dplyr package.

This helps us remember package functions and avoid namespace conflicts.

The problem

If we want to estimate the transmissibility of an infection, it’s

common to use a package such as EpiEstim or

EpiNow2. The EpiEstim package allows

real-time estimation of the reproduction number using case data over

time, reflecting how transmission changes based on when symptoms appear.

For estimating transmission based on when people were actually infected

(rather than symptom onset), the EpiNow2 package extends

this idea by combining it with a model that accounts for delays in

observed data. Both packages require some epidemiological information as

an input. For example, in EpiNow2 we use

EpiNow2::Gamma() to specify a generation time as a

probability distribution adding its mean, standard

deviation (sd), and maximum value (max).

To specify a generation_time that follows a

Gamma distribution with mean \(\mu =

4\), standard deviation \(\sigma =

2\), and a maximum value of 20, we write:

R

generation_time <-

EpiNow2::Gamma(

mean = 4,

sd = 2,

max = 20

)

It is a common practice for analysts to manually search the available literature and copy and paste the summary statistics or the distribution parameters from scientific publications. A challenge that is often faced is that the reporting of different statistical distributions is not consistent across the literature (e.g. a paper may only report the mean, rather than the full underlying distribution). epiparameter’s objective is to facilitate the access to reliable estimates of distribution parameters for a range of infectious diseases, so that they can easily be implemented in outbreak analytic pipelines.

In this episode, we will access the summary statistics of generation time for COVID-19 from the library of epidemiological parameters provided by epiparameter. These metrics can be used to estimate the transmissibility of this disease using EpiNow2 in subsequent episodes.

Let’s start by looking at how many entries are currently available in

the epidemiological distributions database in

epiparameter using epiparameter_db() for the

epidemiological distribution epi_name called generation

time with the string "generation":

R

epiparameter::epiparameter_db(

epi_name = "generation"

)

OUTPUT

Returning 3 results that match the criteria (2 are parameterised).

Use subset to filter by entry variables or single_epiparameter to return a single entry.

To retrieve the citation for each use the 'get_citation' functionOUTPUT

# List of 3 <epiparameter> objects

Number of diseases: 2

❯ Chikungunya ❯ Influenza

Number of epi parameters: 1

❯ generation time

[[1]]

Disease: Influenza

Pathogen: Influenza-A-H1N1

Epi Parameter: generation time

Study: Lessler J, Reich N, Cummings D, New York City Department of Health and

Mental Hygiene Swine Influenza Investigation Team (2009). "Outbreak of

2009 Pandemic Influenza A (H1N1) at a New York City School." _The New

England Journal of Medicine_. doi:10.1056/NEJMoa0906089

<https://doi.org/10.1056/NEJMoa0906089>.

Distribution: weibull (days)

Parameters:

shape: 2.360

scale: 3.180

[[2]]

Disease: Chikungunya

Pathogen: Chikungunya Virus

Epi Parameter: generation time

Study: Salje H, Cauchemez S, Alera M, Rodriguez-Barraquer I, Thaisomboonsuk B,

Srikiatkhachorn A, Lago C, Villa D, Klungthong C, Tac-An I, Fernandez

S, Velasco J, Roque Jr V, Nisalak A, Macareo L, Levy J, Cummings D,

Yoon I (2015). "Reconstruction of 60 Years of Chikungunya Epidemiology

in the Philippines Demonstrates Episodic and Focal Transmission." _The

Journal of Infectious Diseases_. doi:10.1093/infdis/jiv470

<https://doi.org/10.1093/infdis/jiv470>.

Parameters: <no parameters>

Mean: 14 (days)

[[3]]

Disease: Chikungunya

Pathogen: Chikungunya Virus

Epi Parameter: generation time

Study: Guzzetta G, Vairo F, Mammone A, Lanini S, Poletti P, Manica M, Rosa R,

Caputo B, Solimini A, della Torre A, Scognamiglio P, Zumla A, Ippolito

G, Merler S (2020). "Spatial modes for transmission of chikungunya

virus during a large chikungunya outbreak in Italy: a modeling

analysis." _BMC Medicine_. doi:10.1186/s12916-020-01674-y

<https://doi.org/10.1186/s12916-020-01674-y>.

Distribution: gamma (days)

Parameters:

shape: 8.633

scale: 1.447

# ℹ Use `parameter_tbl()` to see a summary table of the parameters.

# ℹ Explore database online at: https://epiverse-trace.github.io/epiparameter/articles/database.htmlIn the library of epidemiological parameters, we may not have a

"generation" time entry for our disease of interest.

Instead, we can look at the serial intervals for COVID-19.

Let’s find what we need to consider for this!

Systematic review data for priority pathogens

The {epireview} R

package has parameters on Ebola, Marburg and Lassa from recent

systematic reviews, with more priority pathogens planned for future

releases. Have a look at this

vignette for more information on how to use these parameters with

epiparameter.

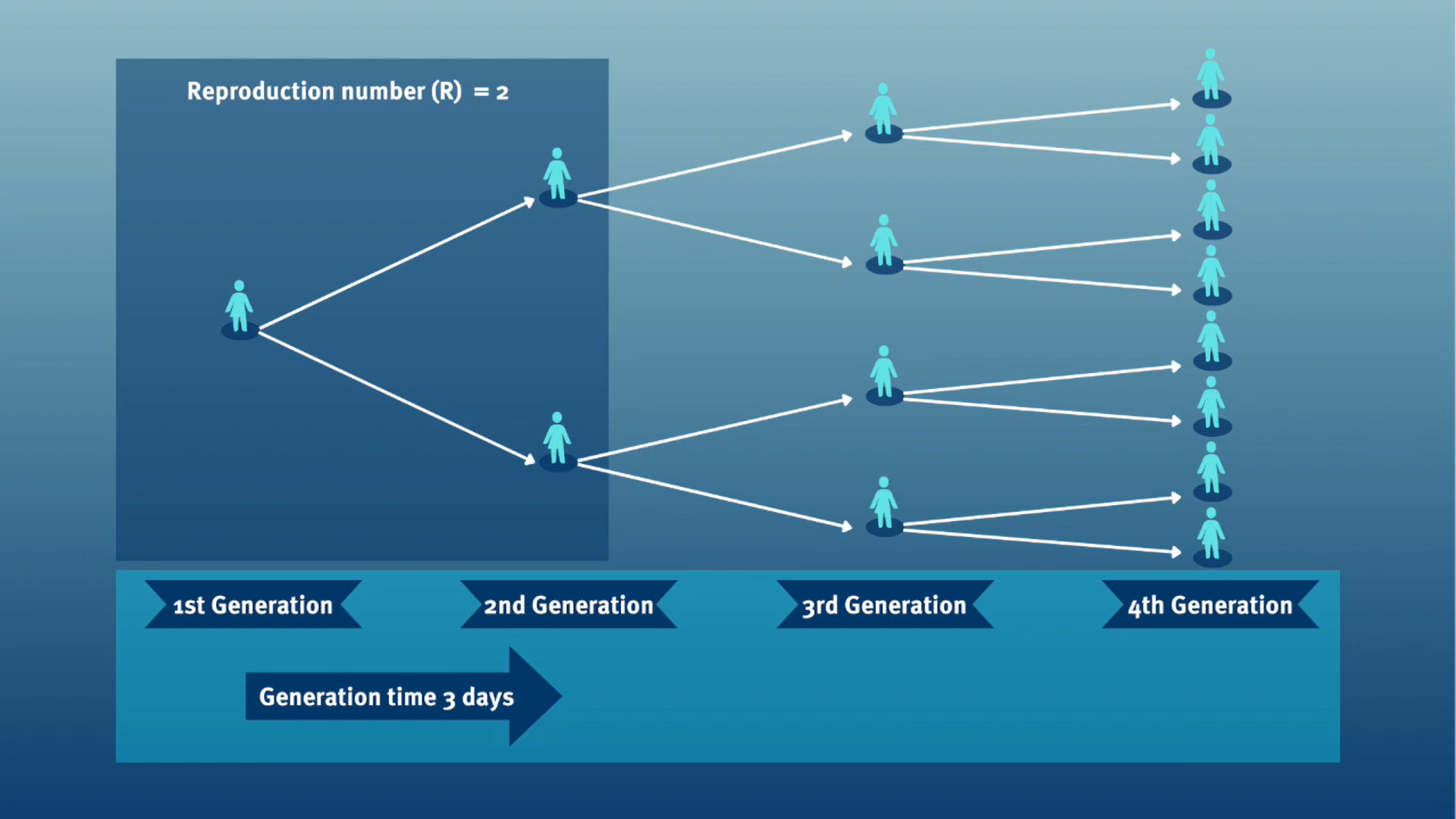

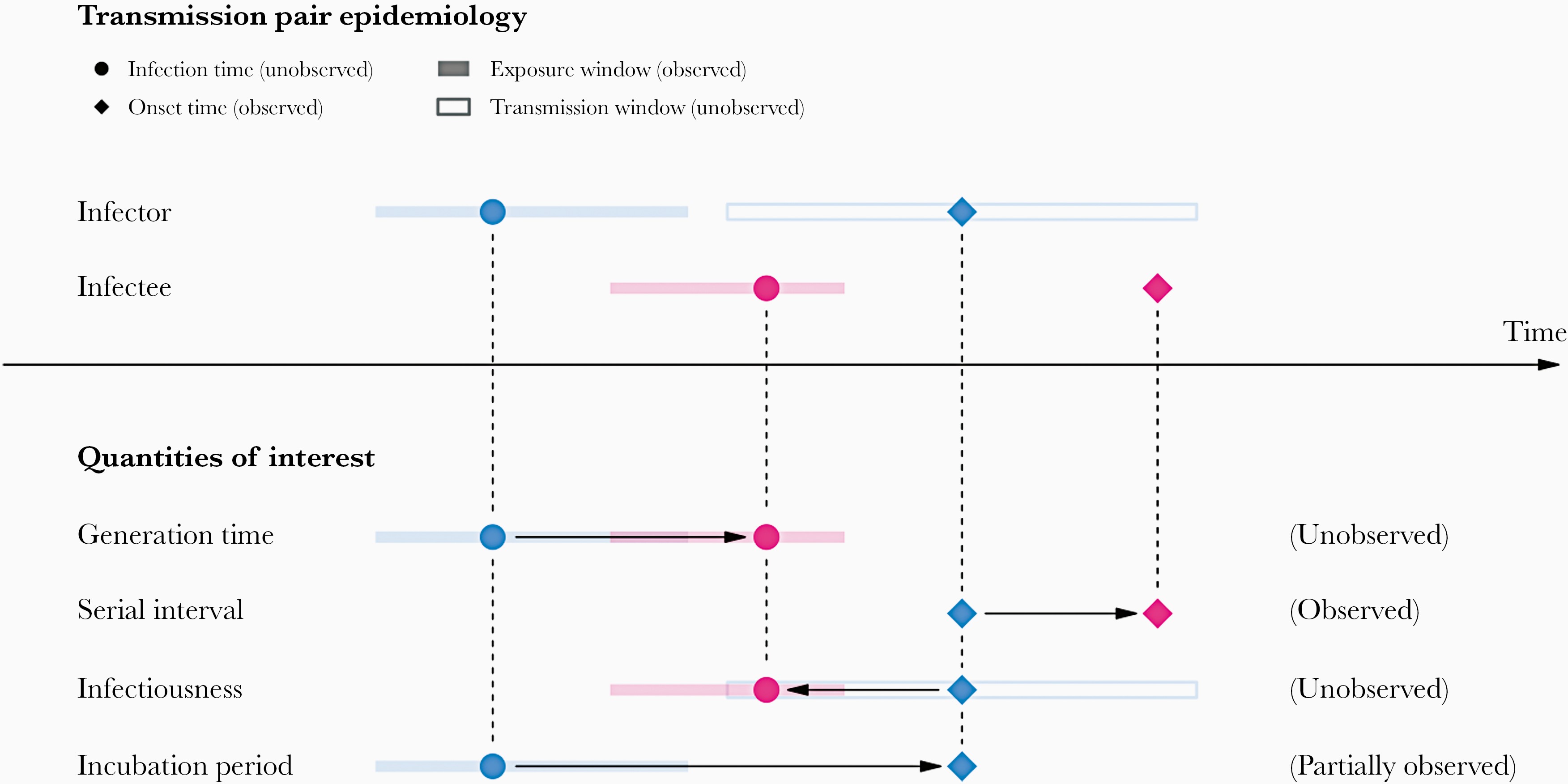

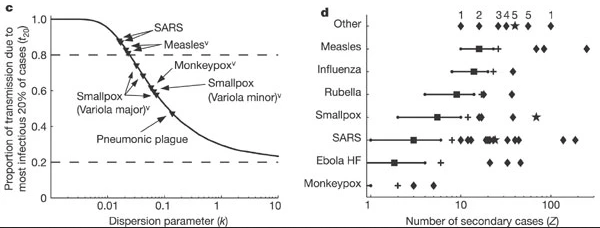

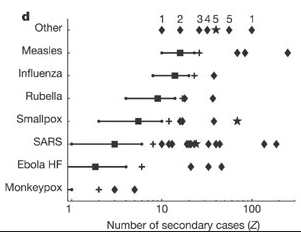

Generation time vs serial interval

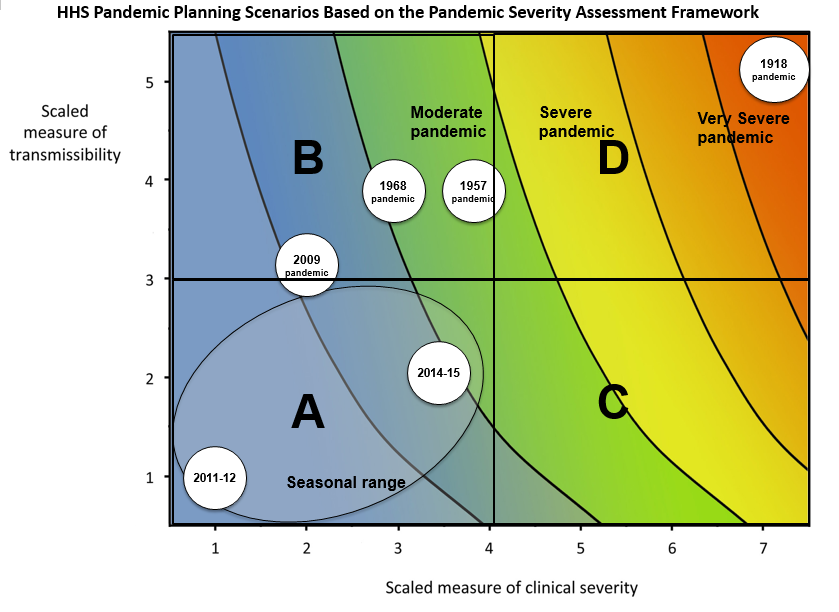

The generation time, jointly with the reproduction number (\(R\)), can provide valuable insights into the likely growth rate of the epidemic, and hence inform the implementation of control measures. The larger the value of \(R\) and/or the shorter the generation time, the more new infections that we would expect per day, and hence the faster the incidence of disease cases will grow.

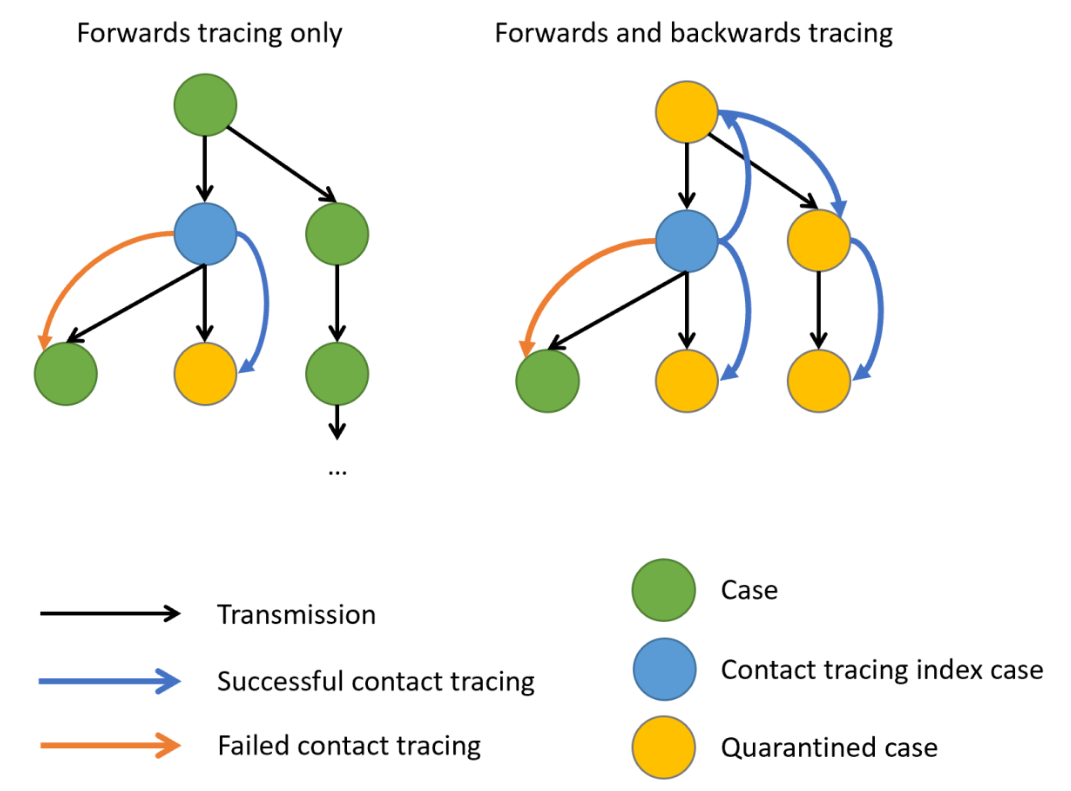

In calculating the effective reproduction number (\(R_{t}\)), the generation time distribution (i.e. delay from one infection to the next) is often approximated by the serial interval distribution (i.e. delay from onset of symptoms in the infector to onset in the infectee). This approximation is frequently used because it is easier to observe and record the onset of symptoms than the exact time of infection.

However, using the serial interval as an approximation of the generation time is most appropriate for diseases in which infectiousness starts after symptom onset (Chung Lau et al., 2021). In cases where infectiousness starts before symptom onset, the serial intervals can have negative values, which occurs when the infectee develops symptoms before the infector in a transmission pair (Nishiura et al., 2020).

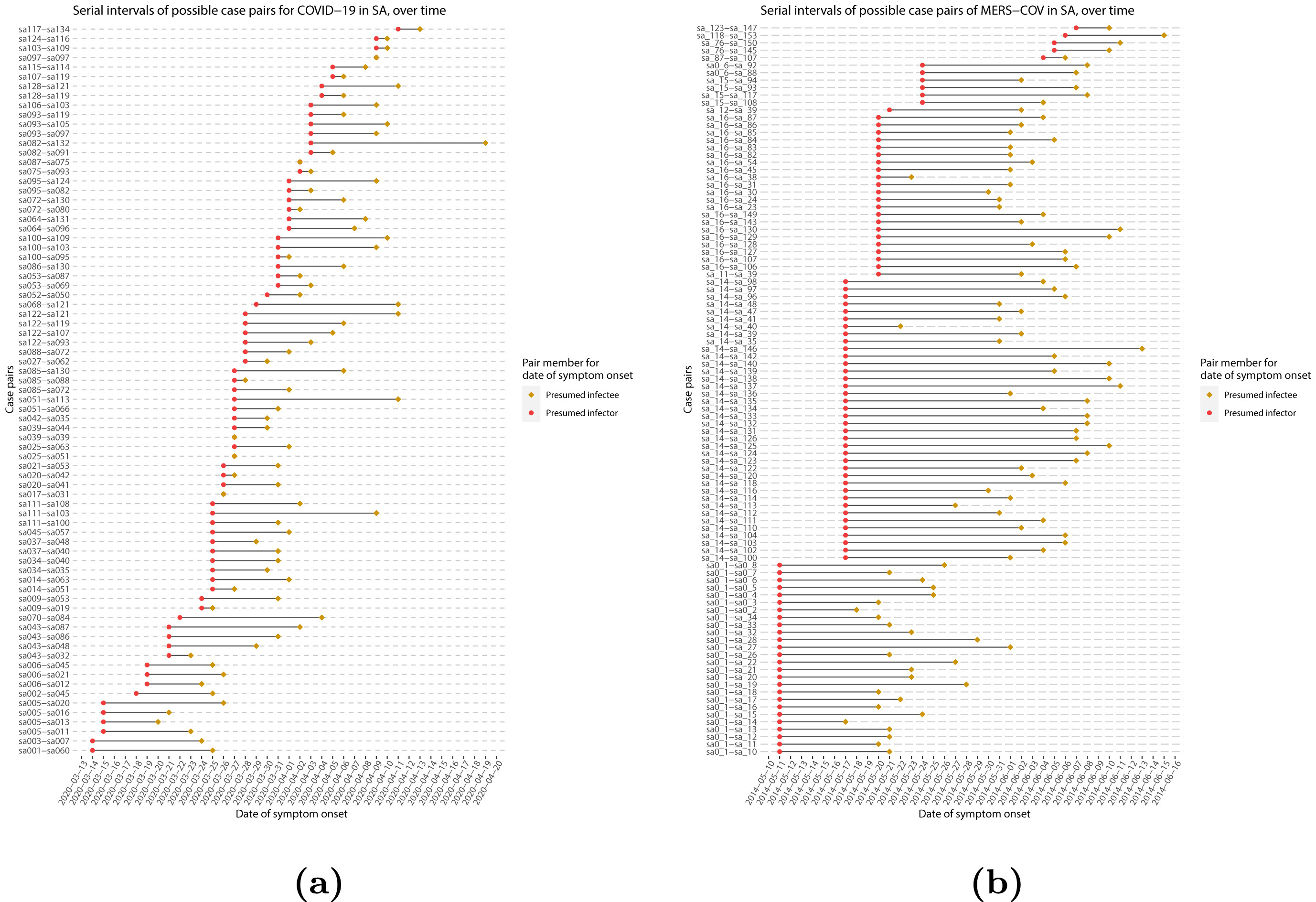

From mean delays to probability distributions

If we measure the serial interval in real data, we typically see that not all case pairs have the same delay from onset-to-onset. We can observe this variability for other key epidemiological delays as well, including the incubation period and infectious period.

To summarise these data from individual and pair time periods, it is therefore useful to quantify the statistical distribution of delays that best fits the data, rather than just focusing on the mean (McFarland et al., 2023).

Statistical distributions are summarised in terms of their summary statistics like the location (mean and percentiles) and spread (variance or standard deviation) of the distribution, or with their distribution parameters that inform about the form (shape and rate/scale) of the distribution. These estimated values can be reported with their uncertainty (95% confidence intervals).

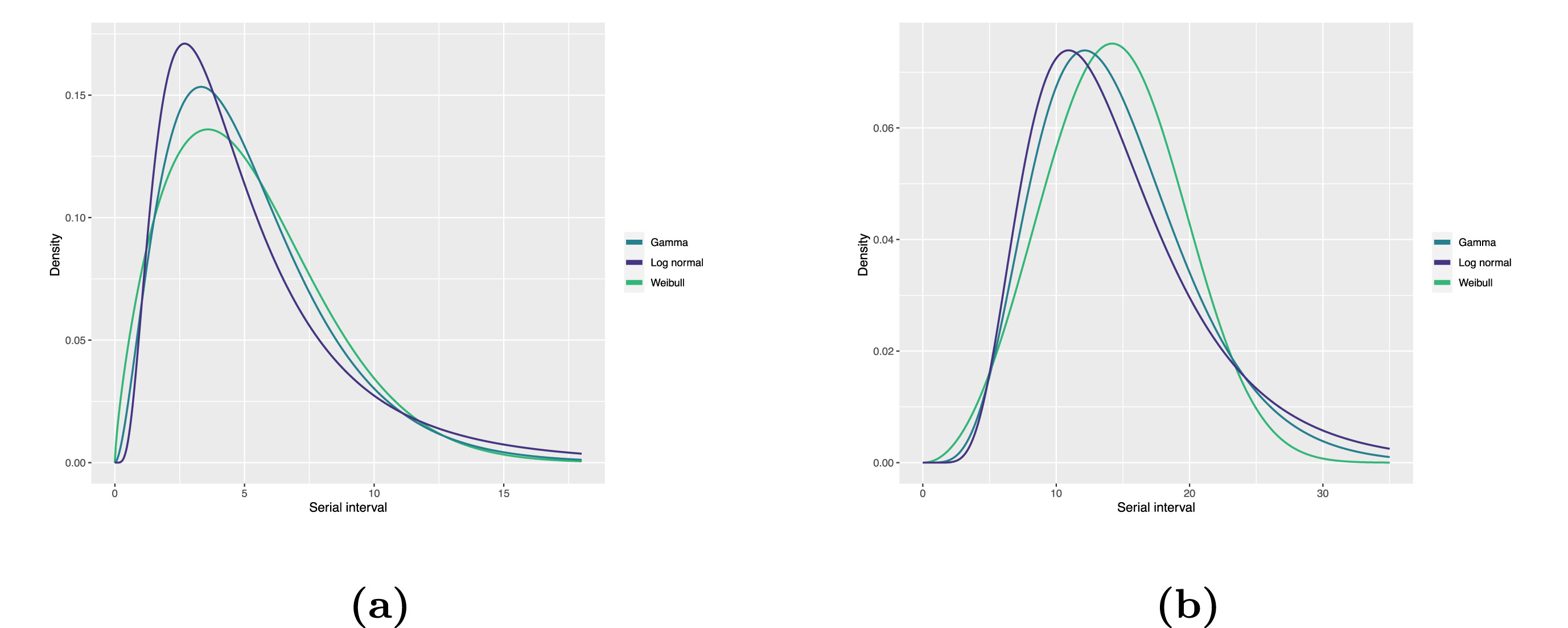

| Gamma | mean | shape | rate/scale |

|---|---|---|---|

| MERS-CoV | 14.13(13.9–14.7) | 6.31(4.88–8.52) | 0.43(0.33–0.60) |

| COVID-19 | 5.1(5.0–5.5) | 2.77(2.09–3.88) | 0.53(0.38–0.76) |

| Weibull | mean | shape | rate/scale |

|---|---|---|---|

| MERS-CoV | 14.2(13.3–15.2) | 3.07(2.64–3.63) | 16.1(15.0–17.1) |

| COVID-19 | 5.2(4.6–5.9) | 1.74(1.46–2.11) | 5.83(5.08–6.67) |

| Log normal | mean | mean-log | sd-log |

|---|---|---|---|

| MERS-CoV | 14.08(13.1–15.2) | 2.58(2.50–2.68) | 0.44(0.39–0.5) |

| COVID-19 | 5.2(4.2–6.5) | 1.45(1.31–1.61) | 0.63(0.54–0.74) |

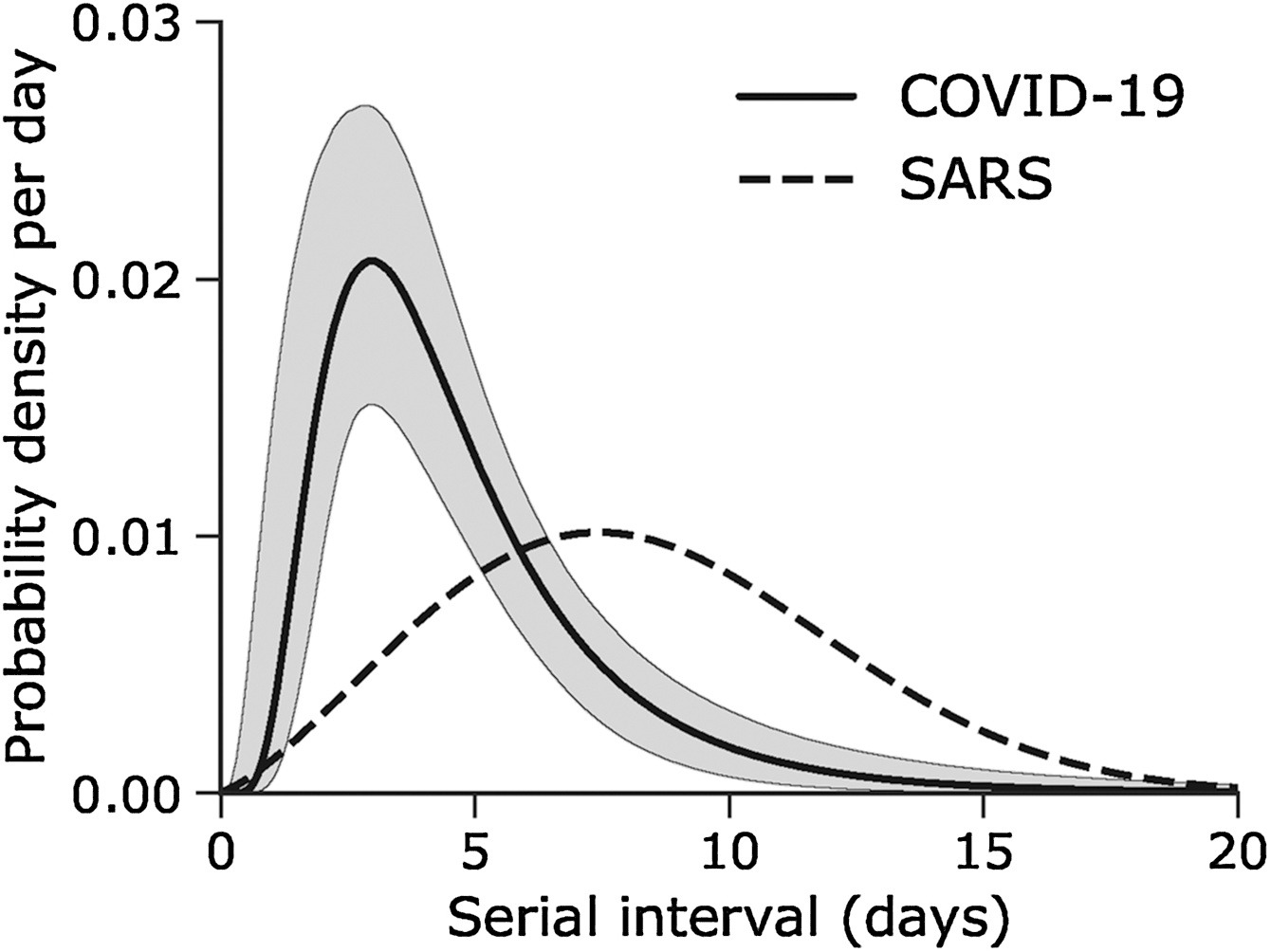

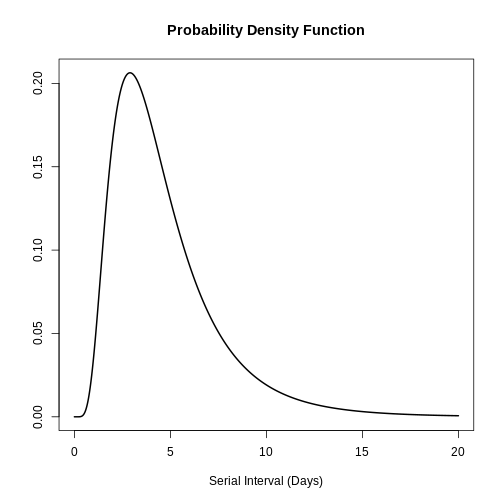

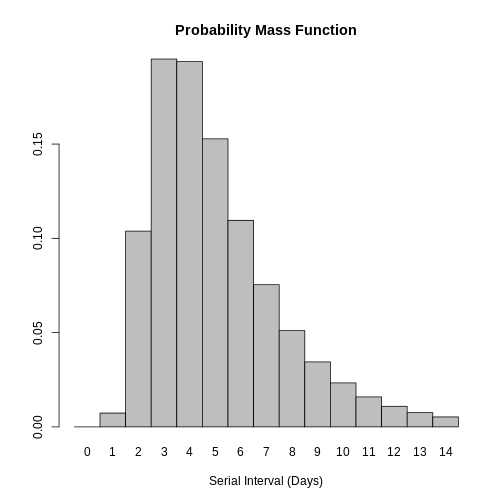

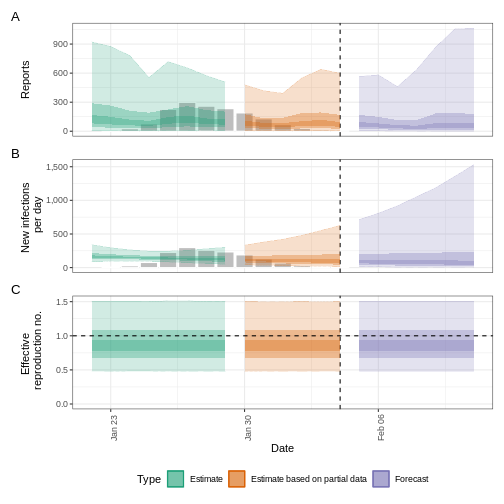

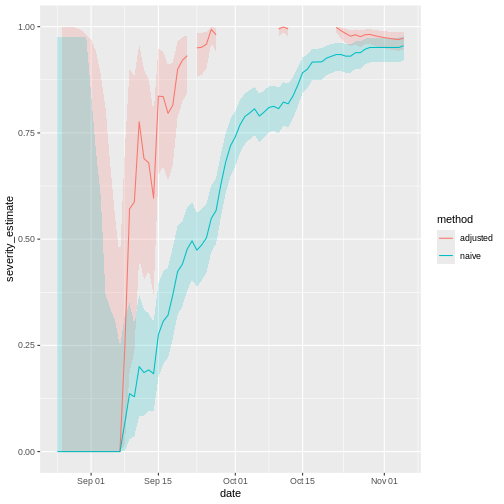

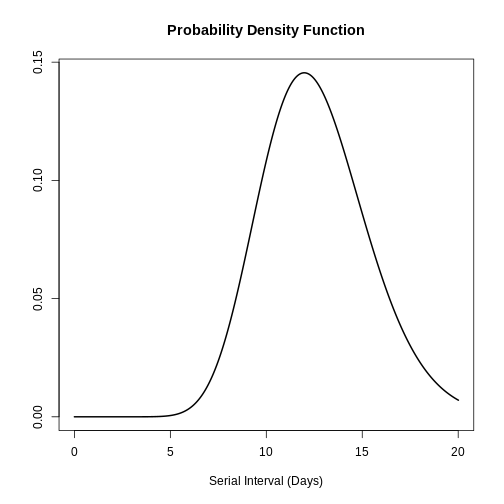

Serial interval

Assume that COVID-19 and SARS have similar reproduction number values and that the serial interval approximates the generation time.

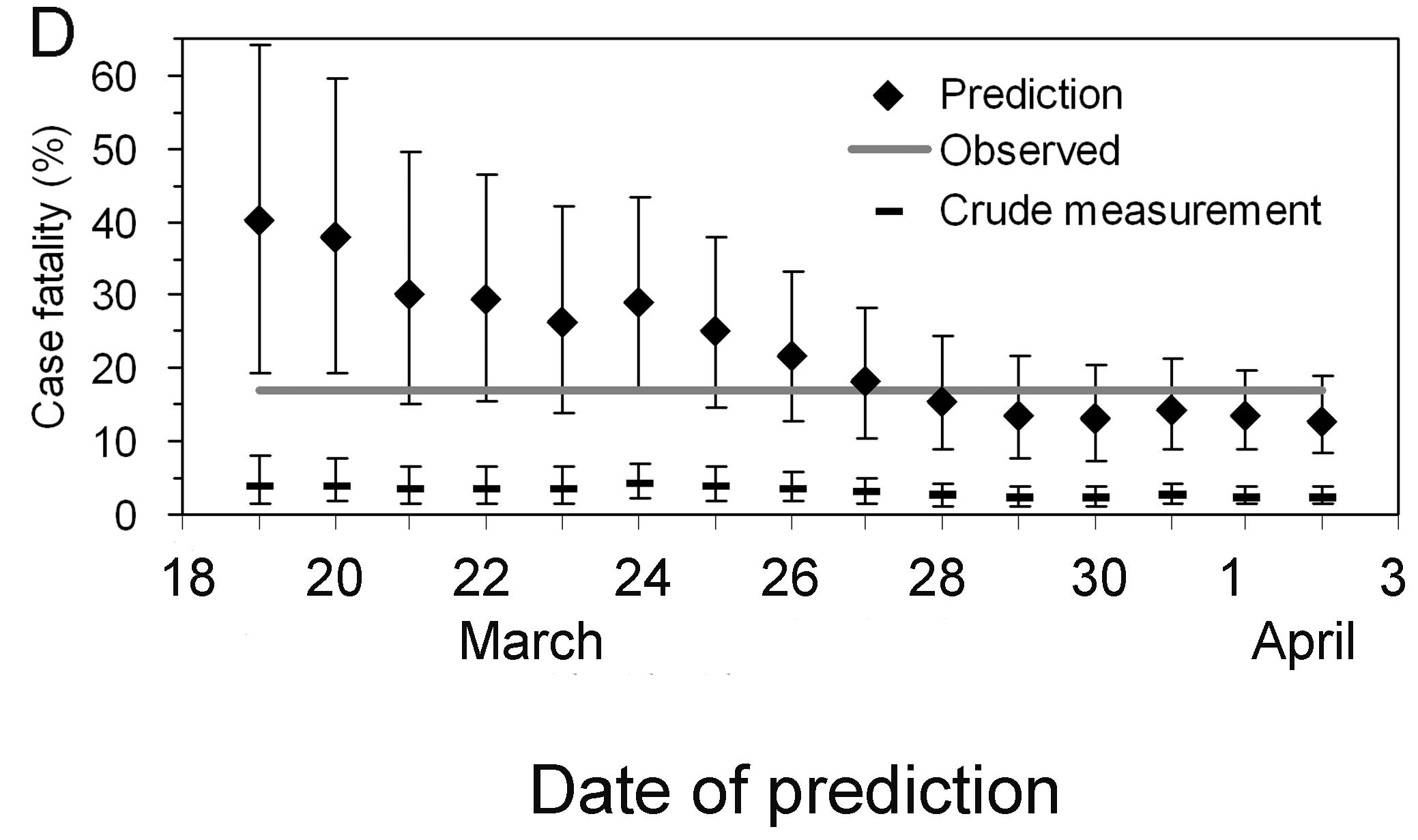

Given the serial interval of both infections in the figure below:

- Which one would be harder to control?

- Why do you conclude that?

The peak of each curve can inform you about the location of the mean of each distribution. A larger mean indicates a longer expected delay between symptom onset in the infector and infectee.

Which one would be harder to control?

COVID-19

Why do you conclude that?

COVID-19 has a lower mean serial interval. The approximate mean value for the serial interval of COVID-19 is around four days, and SARS is about seven days. Thus, if there are many infections in the population, COVID-19 will on average have more new generations of infection in less time than SARS would, assuming similar reproduction numbers. This means far more resources would be required to keep up with the epidemic.

Choosing epidemiological parameters

In this section, we will use epiparameter to obtain the serial interval for COVID-19, as an alternative to the generation time.

First, let’s see how many parameters we have in the epidemiological

distributions database (epiparameter_db()) with the

disease named covid-19. Run this code:

R

epiparameter::epiparameter_db(

disease = "covid"

)

From the epiparameter package, we can use the

epiparameter_db() function to ask for any

disease and also for a specific epidemiological

distribution (epi_name). Run this in your console:

R

epiparameter::epiparameter_db(

disease = "COVID",

epi_name = "serial"

)

With this query combination, we get more than one delay distribution

(because the database has multiple entries). This output is an

<epiparameter> class object.

CASE-INSENSITIVE

epiparameter_db is case-insensitive.

This means that you can use strings with letters in upper or lower case

indistinctly. Strings like "serial",

"serial interval" or "serial_interval" are

also valid.

As suggested in the outputs, to summarise an

<epiparameter> object and get the column names from

the underlying parameter database, we can add the

epiparameter::parameter_tbl() function to the previous code

using the pipe %>%:

R

epiparameter::epiparameter_db(

disease = "covid",

epi_name = "serial"

) %>%

epiparameter::parameter_tbl()

OUTPUT

Returning 4 results that match the criteria (3 are parameterised).

Use subset to filter by entry variables or single_epiparameter to return a single entry.

To retrieve the citation for each use the 'get_citation' functionOUTPUT

# Parameter table:

# A data frame: 4 × 7

disease pathogen epi_name prob_distribution author year sample_size

<chr> <chr> <chr> <chr> <chr> <dbl> <dbl>

1 COVID-19 SARS-CoV-2 serial interval <NA> Alene… 2021 3924

2 COVID-19 SARS-CoV-2 serial interval lnorm Nishi… 2020 28

3 COVID-19 SARS-CoV-2 serial interval weibull Nishi… 2020 18

4 COVID-19 SARS-CoV-2 serial interval norm Yang … 2020 131In the epiparameter::parameter_tbl() output, we can also

find different types of probability distributions (e.g., Log-normal,

Weibull, Normal).

epiparameter uses the base R naming

convention for distributions. This is why Log normal is

called lnorm.

Entries with a missing value (<NA>) in the

prob_distribution column are non-parameterised

entries. They have summary statistics (e.g. a mean and standard

deviation) but no probability distribution specified. Compare these two

outputs:

R

# get an <epiparameter> object

distribution <-

epiparameter::epiparameter_db(

disease = "covid",

epi_name = "serial"

)

distribution %>%

# pluck the first entry in the object class <list>

pluck(1) %>%

# check if <epiparameter> object have distribution parameters

is_parameterised()

# check if the second <epiparameter> object

# have distribution parameters

distribution %>%

pluck(2) %>%

is_parameterised()

Parameterised entries have an Inference method

As detailed in ?is_parameterised, a parameterised

distribution is the entry that has a probability distribution associated

with it provided by an inference_method as shown in

metadata:

R

distribution[[1]]$metadata$inference_method

distribution[[2]]$metadata$inference_method

distribution[[4]]$metadata$inference_method

Find your delay distributions!

Take 2 minutes to explore the epiparameter library.

Choose a disease of interest (e.g., influenza, measles, etc.) and a delay distribution (e.g., the incubation period, onset to death, etc.).

Find:

How many delay distributions are for that disease?

How many types of probability distribution (e.g., gamma, log normal) are for a given delay in that disease?

Ask:

Do you recognise the papers?

Should epiparameter literature review consider any other paper?

The epiparameter_db() function with disease

alone counts the number of entries like:

- studies, and

- delay distributions.

The epiparameter_db() function with disease

and epi_name gets a list of all entries with:

- the complete citation,

- the type of a probability distribution, and

- distribution parameter values.

The combo of epiparameter_db() plus

parameter_tbl() gets a data frame of all entries with

columns like:

- the type of the probability distribution per delay, and

- author and year of the study.

We choose to explore Ebola’s delay distributions:

R

# we expect 16 delay distributions for Ebola

epiparameter::epiparameter_db(

disease = "ebola"

)

OUTPUT

Returning 17 results that match the criteria (17 are parameterised).

Use subset to filter by entry variables or single_epiparameter to return a single entry.

To retrieve the citation for each use the 'get_citation' functionOUTPUT

# List of 17 <epiparameter> objects

Number of diseases: 1

❯ Ebola Virus Disease

Number of epi parameters: 9

❯ hospitalisation to death ❯ hospitalisation to discharge ❯ incubation period ❯ notification to death ❯ notification to discharge ❯ offspring distribution ❯ onset to death ❯ onset to discharge ❯ serial interval

[[1]]

Disease: Ebola Virus Disease

Pathogen: Ebola Virus

Epi Parameter: offspring distribution

Study: Lloyd-Smith J, Schreiber S, Kopp P, Getz W (2005). "Superspreading and

the effect of individual variation on disease emergence." _Nature_.

doi:10.1038/nature04153 <https://doi.org/10.1038/nature04153>.

Distribution: nbinom (No units)

Parameters:

mean: 1.500

dispersion: 5.100

[[2]]

Disease: Ebola Virus Disease

Pathogen: Ebola Virus-Zaire Subtype

Epi Parameter: incubation period

Study: Eichner M, Dowell S, Firese N (2011). "Incubation period of ebola

hemorrhagic virus subtype zaire." _Osong Public Health and Research

Perspectives_. doi:10.1016/j.phrp.2011.04.001

<https://doi.org/10.1016/j.phrp.2011.04.001>.

Distribution: lnorm (days)

Parameters:

meanlog: 2.487

sdlog: 0.330

[[3]]

Disease: Ebola Virus Disease

Pathogen: Ebola Virus-Zaire Subtype

Epi Parameter: onset to death

Study: The Ebola Outbreak Epidemiology Team, Barry A, Ahuka-Mundeke S, Ali

Ahmed Y, Allarangar Y, Anoko J, Archer B, Abedi A, Bagaria J, Belizaire

M, Bhatia S, Bokenge T, Bruni E, Cori A, Dabire E, Diallo A, Diallo B,

Donnelly C, Dorigatti I, Dorji T, Waeber A, Fall I, Ferguson N,

FitzJohn R, Tengomo G, Formenty P, Forna A, Fortin A, Garske T,

Gaythorpe K, Gurry C, Hamblion E, Djingarey M, Haskew C, Hugonnet S,

Imai N, Impouma B, Kabongo G, Kalenga O, Kibangou E, Lee T, Lukoya C,

Ly O, Makiala-Mandanda S, Mamba A, Mbala-Kingebeni P, Mboussou F,

Mlanda T, Makuma V, Morgan O, Mulumba A, Kakoni P, Mukadi-Bamuleka D,

Muyembe J, Bathé N, Ndumbi Ngamala P, Ngom R, Ngoy G, Nouvellet P, Nsio

J, Ousman K, Peron E, Polonsky J, Ryan M, Touré A, Towner R, Tshapenda

G, Van De Weerdt R, Van Kerkhove M, Wendland A, Yao N, Yoti Z, Yuma E,

Kalambayi Kabamba G, Mwati J, Mbuy G, Lubula L, Mutombo A, Mavila O,

Lay Y, Kitenge E (2018). "Outbreak of Ebola virus disease in the

Democratic Republic of the Congo, April–May, 2018: an epidemiological

study." _The Lancet_. doi:10.1016/S0140-6736(18)31387-4

<https://doi.org/10.1016/S0140-6736%2818%2931387-4>.

Distribution: gamma (days)

Parameters:

shape: 2.400

scale: 3.333

# ℹ 14 more elements

# ℹ Use `print(n = ...)` to see more elements.

# ℹ Use `parameter_tbl()` to see a summary table of the parameters.

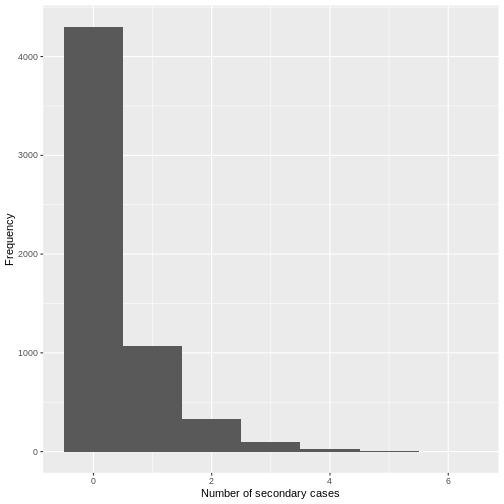

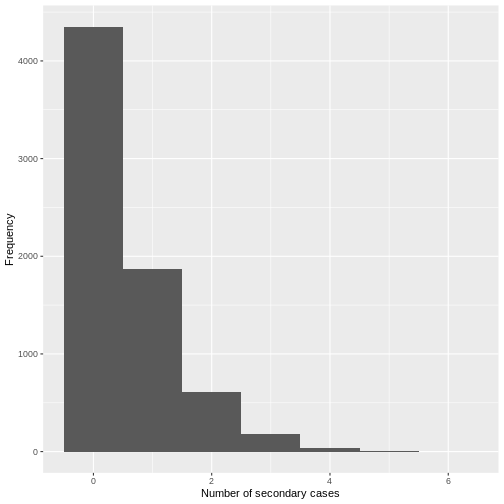

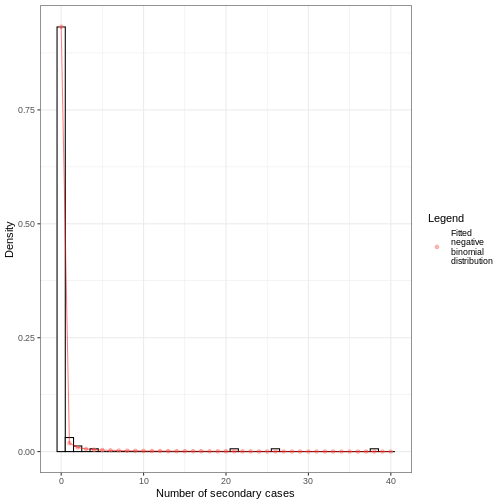

# ℹ Explore database online at: https://epiverse-trace.github.io/epiparameter/articles/database.htmlNow, from the output of epiparameter::epiparameter_db(),

What is an offspring

distribution?

We choose to find Ebola’s incubation periods. This output lists all the papers and parameters found. Run this locally if needed:

R

epiparameter::epiparameter_db(

disease = "ebola",

epi_name = "incubation"

)

We use parameter_tbl() to get a summary display of

all:

R

# we expect 2 different types of delay distributions

# for ebola incubation period

epiparameter::epiparameter_db(

disease = "ebola",

epi_name = "incubation"

) %>%

parameter_tbl()

OUTPUT

Returning 5 results that match the criteria (5 are parameterised).

Use subset to filter by entry variables or single_epiparameter to return a single entry.

To retrieve the citation for each use the 'get_citation' functionOUTPUT

# Parameter table:

# A data frame: 5 × 7

disease pathogen epi_name prob_distribution author year sample_size

<chr> <chr> <chr> <chr> <chr> <dbl> <dbl>

1 Ebola Virus Dise… Ebola V… incubat… lnorm Eichn… 2011 196

2 Ebola Virus Dise… Ebola V… incubat… gamma WHO E… 2015 1798

3 Ebola Virus Dise… Ebola V… incubat… gamma WHO E… 2015 49

4 Ebola Virus Dise… Ebola V… incubat… gamma WHO E… 2015 957

5 Ebola Virus Dise… Ebola V… incubat… gamma WHO E… 2015 792We find two types of probability distributions for this query: log normal and gamma.

How does epiparameter do the collection and review of peer-reviewed literature? We invite you to read the vignette on “Data Collation and Synthesis Protocol”!

Select a single distribution

The epiparameter::epiparameter_db() function works as a

filtering or subset function. We can use the author

argument to keep Hiroshi Nishiura parameters, or the

subset argument to keep parameters from studies with a

sample size higher than 10:

R

epiparameter::epiparameter_db(

disease = "covid",

epi_name = "serial",

author = "Nishiura",

subset = sample_size > 10

) %>%

epiparameter::parameter_tbl()

We still get more than one epidemiological parameter. Instead, we can

set the single_epiparameter argument to TRUE

for only one:

R

epiparameter::epiparameter_db(

disease = "covid",

epi_name = "serial",

single_epiparameter = TRUE

)

OUTPUT

Using Nishiura H, Linton N, Akhmetzhanov A (2020). "Serial interval of novel

coronavirus (COVID-19) infections." _International Journal of

Infectious Diseases_. doi:10.1016/j.ijid.2020.02.060

<https://doi.org/10.1016/j.ijid.2020.02.060>..

To retrieve the citation use the 'get_citation' functionOUTPUT

Disease: COVID-19

Pathogen: SARS-CoV-2

Epi Parameter: serial interval

Study: Nishiura H, Linton N, Akhmetzhanov A (2020). "Serial interval of novel

coronavirus (COVID-19) infections." _International Journal of

Infectious Diseases_. doi:10.1016/j.ijid.2020.02.060

<https://doi.org/10.1016/j.ijid.2020.02.060>.

Distribution: lnorm (days)

Parameters:

meanlog: 1.386

sdlog: 0.568How does ‘single_epiparameter’ work?

Looking at the help documentation for

?epiparameter::epiparameter_db():

- If multiple entries match the arguments supplied and

single_epiparameter = TRUE, then the parameterised<epiparameter>with the largest sample size will be returned. - If multiple entries are equal after this sorting, the first entry in the list will be returned.

What is a parametrised <epiparameter>?

Look at ?is_parameterised.

Let’s assign this <epiparameter> class object to

the covid_serialint object.

R

covid_serialint <-

epiparameter::epiparameter_db(

disease = "covid",

epi_name = "serial",

single_epiparameter = TRUE

)

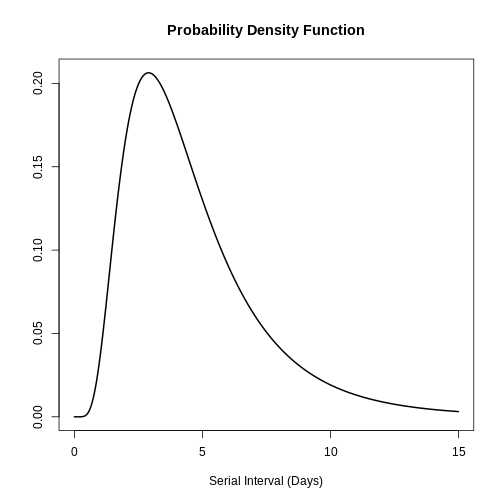

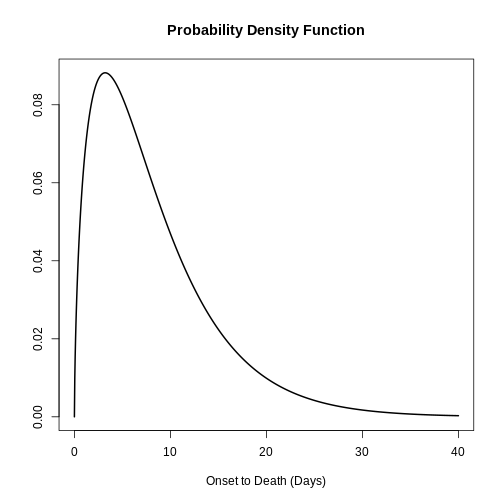

You can use plot() to <epiparameter>

objects to visualise:

- the Probability Density Function (PDF) and

- the Cumulative Distribution Function (CDF).

R

# plot <epiparameter> object

plot(covid_serialint)

With the xlim argument, you can change the length or

number of days in the x axis. Explore what this looks

like:

R

# plot <epiparameter> object

plot(covid_serialint, xlim = c(1, 60))

Extract the summary statistics

We can get the mean and standard deviation

(sd) from this <epiparameter> diving

into the summary_stats object:

R

# get the mean

covid_serialint$summary_stats$mean

OUTPUT

[1] 4.7Now, we have an epidemiological parameter we can reuse! Given that

the covid_serialint is a lnorm or log normal

distribution, we can replace the summary statistics

numbers we plug into the EpiNow2::LogNormal() function:

R

generation_time <-

EpiNow2::LogNormal(

mean = covid_serialint$summary_stats$mean, # replaced!

sd = covid_serialint$summary_stats$sd, # replaced!

max = 20

)

In the next episode we’ll learn how to use EpiNow2 to

correctly specify distributions, estimate transmissibility. Then, how to

use distribution functions to get a maximum value

(max) for EpiNow2::LogNormal() and use

epiparameter in your analysis.

Log normal distributions

If you need the log normal distribution parameters

instead of the summary statistics, we can use

epiparameter::get_parameters():

R

covid_serialint_parameters <-

epiparameter::get_parameters(covid_serialint)

covid_serialint_parameters

OUTPUT

meanlog sdlog

1.3862617 0.5679803 This gets a vector of class <numeric> ready to use

as input for any other package!

Consider that {EpiNow2} functions also accept distribution parameters

as inputs. Run ?EpiNow2::LogNormal to read the Probability

distributions reference manual.

Challenges

Ebola’s serial interval

Take 1 minute to:

Get access to the Ebola serial interval with the highest sample size.

Answer:

What is the

sdof the epidemiological distribution?What is the

sample_sizeused in that study?

Ebola’s severity parameter

A severity parameter like the duration of hospitalisation could add to the information needed about the bed capacity in response to an outbreak (Cori et al., 2017).

For Ebola:

- What is the reported point estimate of the mean duration of health care and case isolation?

An informative delay should measure the time from symptom onset to recovery or death.

Find a way to access the whole epiparameter database

and find how that delay may be stored. The parameter_tbl()

output is a dataframe.

R

# one way to get the list of all the available parameters

epiparameter_db(disease = "all") %>%

parameter_tbl() %>%

as_tibble() %>%

distinct(epi_name)

OUTPUT

Returning 125 results that match the criteria (100 are parameterised).

Use subset to filter by entry variables or single_epiparameter to return a single entry.

To retrieve the citation for each use the 'get_citation' functionOUTPUT

# A tibble: 13 × 1

epi_name

<chr>

1 incubation period

2 serial interval

3 generation time

4 onset to death

5 offspring distribution

6 hospitalisation to death

7 hospitalisation to discharge

8 notification to death

9 notification to discharge

10 onset to discharge

11 onset to hospitalisation

12 onset to ventilation

13 case fatality risk R

ebola_severity <- epiparameter_db(

disease = "ebola",

epi_name = "onset to discharge"

)

OUTPUT

Returning 1 results that match the criteria (1 are parameterised).

Use subset to filter by entry variables or single_epiparameter to return a single entry.

To retrieve the citation for each use the 'get_citation' functionR

# point estimate

ebola_severity$summary_stats$mean

OUTPUT

[1] 15.1Check that for some epiparameter entries you will also have the uncertainty around the point estimate of each summary statistic:

R

# 95% confidence intervals

ebola_severity$summary_stats$mean_ci

OUTPUT

[1] 95R

# limits of the confidence intervals

ebola_severity$summary_stats$mean_ci_limits

OUTPUT

[1] 14.6 15.6The distribution zoo

Explore this shinyapp called The Distribution Zoo!

Follow these steps to reproduce the form of the COVID serial interval

distribution from epiparameter

(covid_serialint object):

- Access the https://ben18785.shinyapps.io/distribution-zoo/ shiny app website,

- Go to the left panel,

- Keep the Category of distribution:

Continuous Univariate, - Select a new Type of distribution:

Log-Normal, - Move the sliders, i.e. the graphical control

element that allows you to adjust a value by moving a handle along a

horizontal track or bar to the

covid_serialintparameters.

Replicate these with the distribution object and all its

list elements: [[2]], [[3]], and

[[4]]. Explore how the shape of a distribution changes when

its parameters change.

Share about:

- What other features of the website do you find helpful?

Key Points

- Use epiparameter to access the literature catalogue of epidemiological delay distributions.

- Use

epiparameter_db()to select single delay distributions. - Use

parameter_tbl()for an overview of multiple delay distributions. - Reuse known estimates for unknown disease in the early stage of an outbreak when no contact tracing data is available.

Content from Quantifying transmission

Last updated on 2026-02-11 | Edit this page

Overview

Questions

- How can I estimate the time-varying reproduction number (\(Rt\)) and growth rate from a time series of case data?

- How can I quantify geographical heterogeneity from these transmission metrics?

Objectives

- Learn how to estimate transmission metrics from a time series of

case data using the R package

EpiNow2

Prerequisites

Learners should familiarise themselves with following concepts before working through this tutorial:

Statistics: probability distributions, principle of Bayesian analysis.

Epidemic theory: Effective reproduction number.

Data science: Data transformation and visualization. You can review the episode on Aggregate and visualize incidence data.

Reminder: the Effective Reproduction Number, \(R_t\)

The basic reproduction number, \(R_0\), is the average number of cases caused by one infectious individual in an entirely susceptible population.

But in an ongoing outbreak, the population does not remain entirely susceptible as those that recover from infection are typically immune. Moreover, there can be changes in behaviour or other factors that affect transmission. When we are interested in monitoring changes in transmission we are therefore more interested in the value of the effective reproduction number, \(R_t\), which represents the average number of cases caused by one infectious individual in the population at time \(t\), given the current state of the population (including immunity levels and control measures).

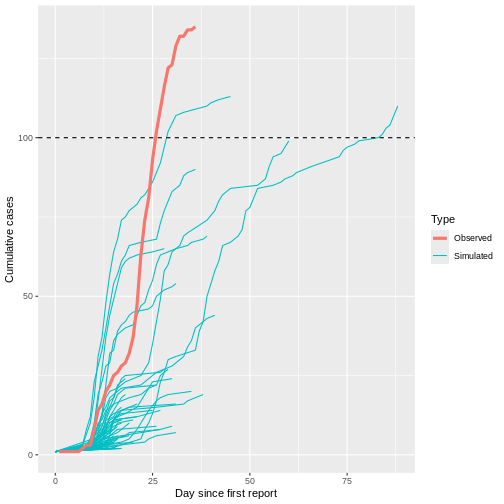

Introduction

The transmission intensity of an outbreak is quantified using two key metrics: the reproduction number, which informs on the strength of the transmission by indicating how many new cases are expected from each existing case; and the growth rate, which informs on the speed of the transmission by indicating how rapidly the outbreak is spreading or declining (doubling/halving time) within a population. For more details on the distinction between speed and strength of transmission and implications for control, review Dushoff & Park, 2021.

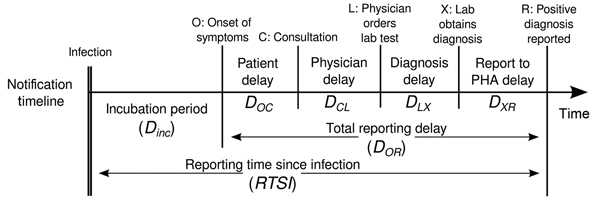

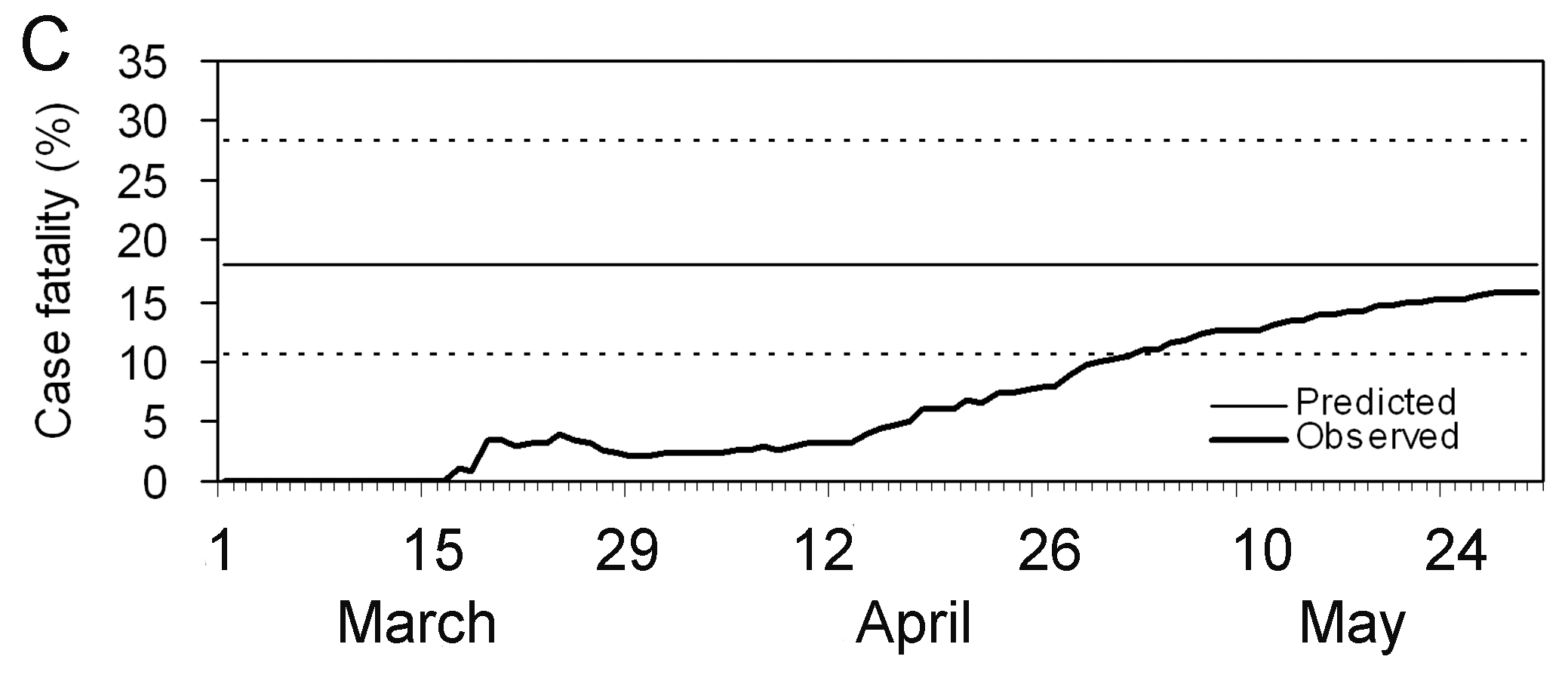

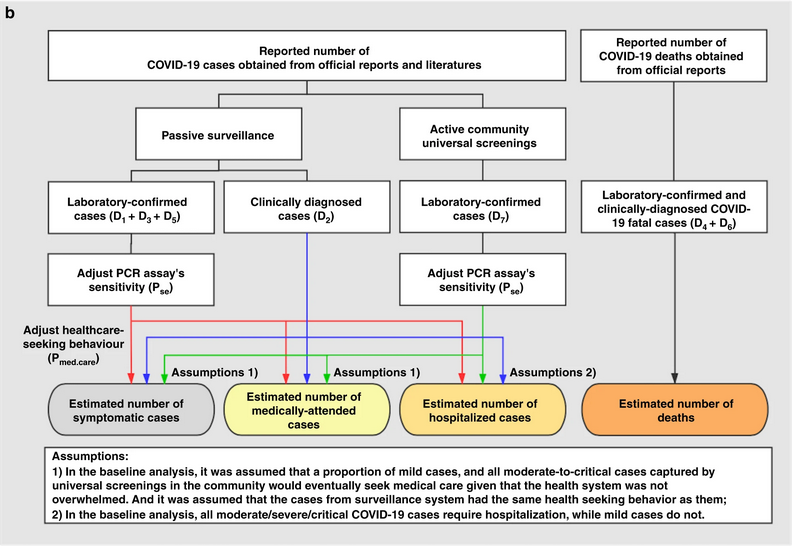

To estimate these key metrics using case data we must account for delays between the date of infections and date of reported cases. In an outbreak situation, data are usually available on reported dates only, therefore we must use estimation methods to account for these delays when trying to understand changes in transmission over time.

In the next tutorials we will focus on how to use the functions in EpiNow2 to estimate transmission metrics of case data. We will not cover the theoretical background of the models or inference framework, for details on these concepts see the vignette.

In this tutorial we are going to learn how to use the

EpiNow2 package to estimate the time-varying reproduction

number. We’ll get input data from incidence2. We’ll use

the tidyr and dplyr packages to arrange

some of its outputs, ggplot2 to visualize case

distribution, and the pipe %>% to connect some of their

functions, so let’s also call to the tidyverse

package:

R

library(EpiNow2)

library(incidence2)

library(tidyverse)

The double-colon

The double-colon :: in R lets you call a specific

function from a package without loading the entire package into the

current environment.

For example, dplyr::filter(data, condition) uses

filter() from the dplyr package.

This helps us remember package functions and avoid namespace conflicts.

Bayesian inference

The R package EpiNow2 uses a Bayesian inference framework to

estimate reproduction numbers and infection times based on reporting

dates. In other words, it estimates transmission based on when people

were actually infected (rather than symptom onset), by accounting for

delays in observed data. In contrast, the EpiEstim

package allows faster and simpler real-time estimation of the

reproduction number using only case data over time, reflecting how

transmission changes based on when symptoms appear.

In Bayesian inference, we use prior knowledge (prior distributions) with data (in a likelihood function) to find the posterior probability:

\(Posterior \, probability \propto likelihood \times prior \, probability\)

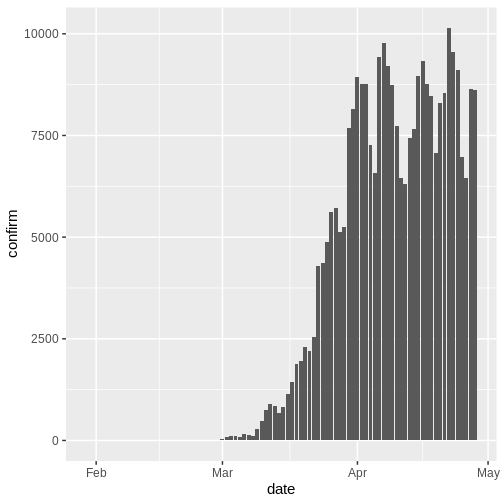

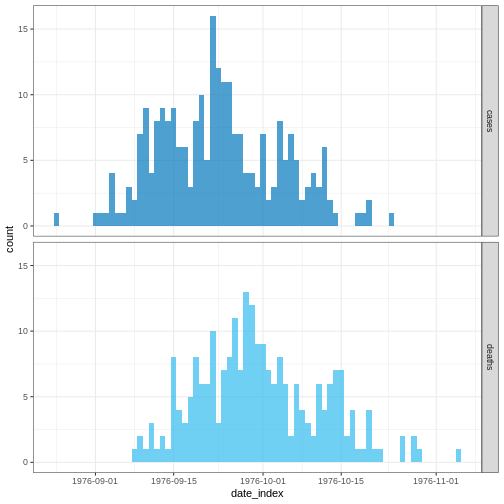

Delay distributions and case data

Case data

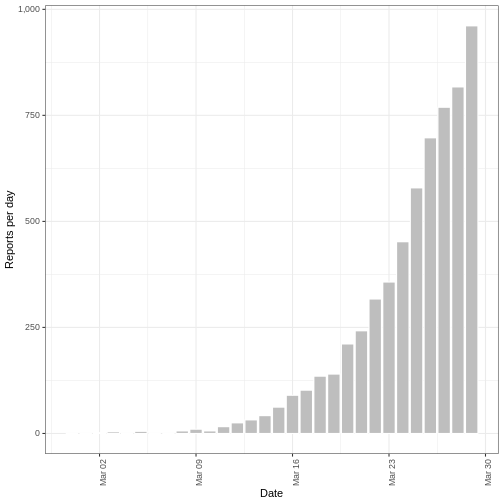

To illustrate the functions of EpiNow2 we will use

outbreak data of the start of the COVID-19 pandemic from the United

Kingdom, but only with the first 90 days observed. The data are

available in the R package incidence2.

R

dplyr::as_tibble(incidence2::covidregionaldataUK)

OUTPUT

# A tibble: 6,370 × 13

date region region_code cases_new cases_total deaths_new deaths_total

<date> <chr> <chr> <dbl> <dbl> <dbl> <dbl>

1 2020-01-30 East Mi… E12000004 NA NA NA NA

2 2020-01-30 East of… E12000006 NA NA NA NA

3 2020-01-30 England E92000001 2 2 NA NA

4 2020-01-30 London E12000007 NA NA NA NA

5 2020-01-30 North E… E12000001 NA NA NA NA

6 2020-01-30 North W… E12000002 NA NA NA NA

7 2020-01-30 Norther… N92000002 NA NA NA NA

8 2020-01-30 Scotland S92000003 NA NA NA NA

9 2020-01-30 South E… E12000008 NA NA NA NA

10 2020-01-30 South W… E12000009 NA NA NA NA

# ℹ 6,360 more rows

# ℹ 6 more variables: recovered_new <dbl>, recovered_total <dbl>,

# hosp_new <dbl>, hosp_total <dbl>, tested_new <dbl>, tested_total <dbl>To use the data, we must format the data to have two columns:

-

date: the date (as a date object see?is.Date()), -

confirm: number of disease reports (confirm) on that date.

Let’s use tidyr and incidence2 for this:

R

cases_sliced <- incidence2::covidregionaldataUK %>%

tibble::as_tibble() %>%

# Preprocess missing values

tidyr::replace_na(base::list(cases_new = 0)) %>%

# Compute the daily incidence

incidence2::incidence(

date_index = "date",

counts = "cases_new",

count_values_to = "confirm",

date_names_to = "date",

complete_dates = TRUE

) %>%

# Keep the first 90 dates

dplyr::slice_head(n = 90)

With incidence2::incidence() we aggregate cases in

different time intervals (i.e., days, weeks or months) or per

group categories. Also we can have complete dates for all the

range of dates per group category using

complete_dates = TRUE Explore later the incidence2::incidence()

reference manual

Can we replicate {incidence2} with {dplyr}?

We can get an object similar to cases from the

incidence2::covidregionaldataUK data frame using the

dplyr package.

R

incidence2::covidregionaldataUK %>%

dplyr::select(date, cases_new) %>%

dplyr::group_by(date) %>%

dplyr::summarise(confirm = sum(cases_new, na.rm = TRUE)) %>%

dplyr::ungroup() %>%

dplyr::slice_head(n = 90)

However, the incidence2::incidence() function contains

convenient arguments like complete_dates that facilitate

getting an incidence object with the same range of dates for each

grouping without the need of extra code lines or a time-series

package.

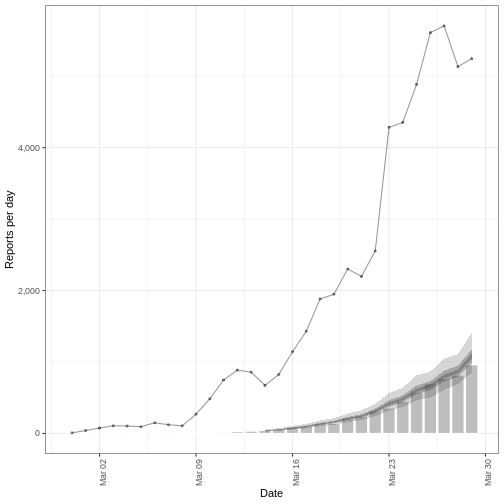

In an outbreak situation it is likely we would only have access to the beginning of the input data set. Therefore we assume we only have the first 90 days of this data.

R

plot(cases_sliced)

To pass the outputs from incidence2 to

EpiNow2 we need to drop one column from the

cases_sliced object:

R

# Drop column for {EpiNow2} input format

cases <- cases_sliced %>%

dplyr::select(-count_variable)

cases

OUTPUT

# A tibble: 90 × 2

date confirm

<date> <dbl>

1 2020-01-30 3

2 2020-01-31 0

3 2020-02-01 0

4 2020-02-02 0

5 2020-02-03 0

6 2020-02-04 0

7 2020-02-05 2

8 2020-02-06 0

9 2020-02-07 0

10 2020-02-08 8

# ℹ 80 more rowsDelay distributions

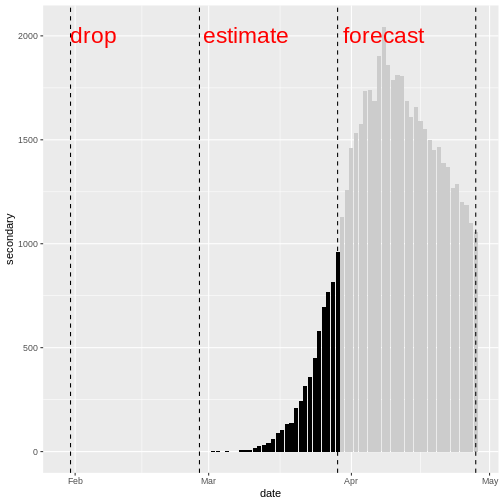

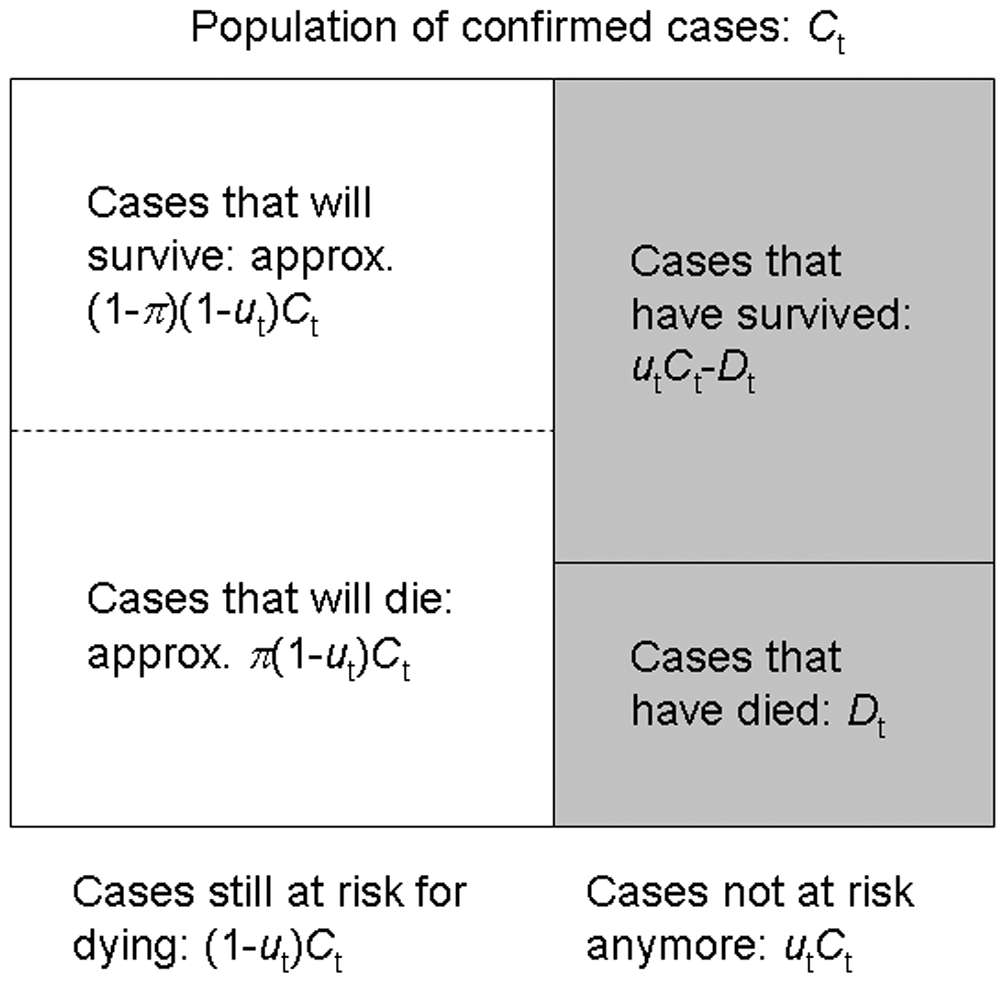

We assume there are delays from the time of infection until the time a case is reported. We specify these delays as distributions to account for the uncertainty in individual level differences. The delay may involve multiple types of processes. A typical delay from time of infection to case reporting may consist of:

time from infection to symptom onset (the incubation period) + time from symptom onset to case notification (the reporting time) .

The delay distribution for each of these processes can either estimated from data or obtained from the literature. We can express uncertainty about what the correct parameters of the distributions by assuming the distributions have fixed parameters or whether they have variable parameters. To understand the difference between fixed and variable distributions, let’s consider the incubation period.

Delays and data

The number of delays and type of delay are a flexible input that depend on the data. The examples below highlight how the delays can be specified for different data sources:

| Data source | Delay(s) |

|---|---|

| Time of symptom onset | Incubation period |

| Time of case report | Incubation period + time from symptom onset to case notification |

| Time of hospitalisation | Incubation period + time from symptom onset to hospitalisation |

Incubation period distribution

The distribution of incubation period for many diseases can usually be obtained from the literature. The package epiparameter contains a library of epidemiological parameters for different diseases obtained from the literature.

We specify a (fixed) gamma distribution with mean \(\mu = 4\) and standard deviation \(\sigma = 2\) (shape = \(4\), scale = \(1\)) using the function

Gamma() as follows:

R

incubation_period_fixed <- EpiNow2::Gamma(

mean = 4,

sd = 2,

max = 20

)

incubation_period_fixed

OUTPUT

- gamma distribution (max: 20):

shape:

4

rate:

1The argument max is the maximum value the distribution

can take; in this example, 20 days.

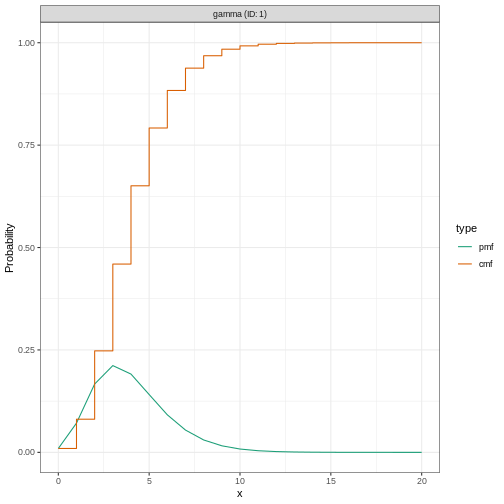

We can plot distributions generated by EpiNow2 using

plot():

R

plot(incubation_period_fixed)

Why a gamma distribution?

The incubation period must be a positive value. Therefore we must specify a distribution in EpiNow2 which is for positive values only.

Gamma() supports Gamma distributions and

LogNormal() Log-normal distributions, which are

distributions for positive values only.

For all types of delay, we will need to use distributions for positive values only - we don’t want to include delays of negative days in our analysis!

Including distribution uncertainty

To specify a variable distribution, we include uncertainty around the mean \(\mu\) and standard deviation \(\sigma\) of our gamma distribution. If our incubation period distribution has a mean \(\mu\) and standard deviation \(\sigma\), then we assume the mean (\(\mu\)) follows a Normal distribution with standard deviation \(\sigma_{\mu}\):

\[\mbox{Normal}(\mu,\sigma_{\mu}^2)\]

and a standard deviation (\(\sigma\)) follows a Normal distribution with standard deviation \(\sigma_{\sigma}\):

\[\mbox{Normal}(\sigma,\sigma_{\sigma}^2).\]

We specify this using Normal() for each argument: the

mean (\(\mu = 4\) with \(\sigma_{\mu} = 0.5\)) and standard

deviation (\(\sigma = 2\) with \(\sigma_{\sigma} = 0.5\)).

R

incubation_period_variable <- EpiNow2::Gamma(

mean = EpiNow2::Normal(mean = 4, sd = 0.5),

sd = EpiNow2::Normal(mean = 2, sd = 0.5),

max = 20

)

incubation_period_variable

OUTPUT

- gamma distribution (max: 20):

shape:

- normal distribution:

mean:

4

sd:

0.61

rate:

- normal distribution:

mean:

1

sd:

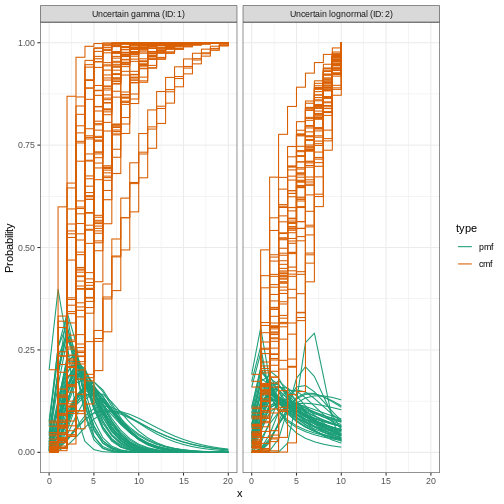

0.31Let’s plot the distribution we just configured:

R

plot(incubation_period_variable)

Reporting delays

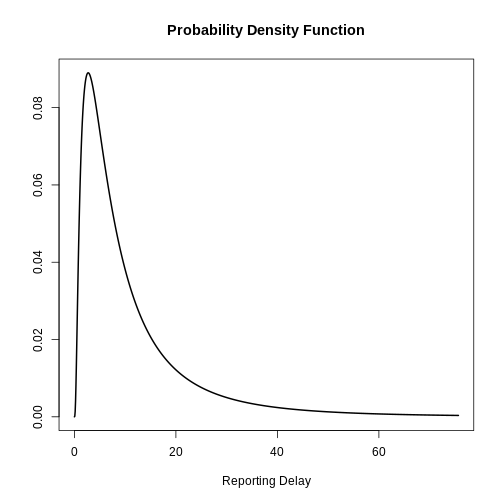

After the incubation period, there will be an additional delay of time from symptom onset to case notification: the reporting delay. We can specify this as a fixed or variable distribution, or estimate a distribution from data.

When specifying a distribution, it is useful to visualise the probability density to see the peak and spread of the distribution, in this case we will use a log normal distribution.

If we want to assume that the mean reporting delay is 2 days (with a

uncertainty of 0.5 days) and a standard deviation of 1 day (with

uncertainty of 0.5 days), we can specify a variable distribution using

LogNormal() as before:

R

reporting_delay_variable <- EpiNow2::LogNormal(

meanlog = EpiNow2::Normal(mean = 2, sd = 0.5),

sdlog = EpiNow2::Normal(mean = 1, sd = 0.5),

max = 10

)

Visualize a log Normal distribution using {epiparameter}

Using epiparameter::epiparameter() we can create a

custom distribution. The fixed log normal distribution will look

like:

R

library(epiparameter)

R

epiparameter::epiparameter(

disease = "covid",

epi_name = "reporting delay",

prob_distribution =

epiparameter::create_prob_distribution(

prob_distribution = "lnorm",

prob_distribution_params = c(

meanlog = 2,

sdlog = 1

)

)

) %>%

plot()

How to get the reporting delay from data?

If data is available on the time between symptom onset and reporting,

we can use the function EpiNow2::estimate_delay() to

estimate a log normal distribution from a vector of delays.

The code below illustrates how to use

EpiNow2::estimate_delay() with synthetic Ebola data. We

calculate the reporting delay for each case the time difference

from date of onset to date of

hospitalization (date when case was reported).

R

library(tidyverse)

# Steps:

# - get Ebola data from package {outbreaks}

# - keep a subset of columns for this example only

# - calculate the time difference between two dates in linelist

# - extract the time difference as a vector class object

# - estimate the delay parameters using {EpiNow2}

outbreaks::ebola_sim_clean$linelist %>%

tibble::as_tibble() %>%

dplyr::select(case_id, date_of_onset, date_of_hospitalisation) %>%

dplyr::mutate(reporting_delay = date_of_hospitalisation - date_of_onset) %>%

dplyr::pull(reporting_delay) %>%

EpiNow2::estimate_delay(

samples = 1000,

bootstraps = 10

)

OUTPUT

- lognormal distribution (max: 22):

meanlog:

- normal distribution:

mean:

0.18

sd:

0.14

sdlog:

- normal distribution:

mean:

1

sd:

0.11Generation time

We also must specify a distribution for the generation time. Here we will use a log normal distribution with mean 3.6 and standard deviation 3.1 (Ganyani et al. 2020).

R

generation_time_variable <- EpiNow2::LogNormal(

mean = EpiNow2::Normal(mean = 3.6, sd = 0.5),

sd = EpiNow2::Normal(mean = 3.1, sd = 0.5),

max = 20

)

Finding estimates

The function epinow() is a wrapper for the function

estimate_infections() used to estimate cases by date of

infection. The generation time distribution and delay distributions must

be passed using the functions generation_time_opts() and

delay_opts() respectively.

There are numerous other inputs that can be passed to

epinow(), see ?EpiNow2::epinow() for more

detail. One optional input is to specify a log normal prior for

the effective reproduction number \(R_t\) at the start of the outbreak. We

specify a mean of 2 days and standard deviation of 2 days as arguments

of prior within rt_opts():

R

# define Rt prior distribution

rt_prior <- EpiNow2::rt_opts(prior = EpiNow2::LogNormal(mean = 2, sd = 2))

Bayesian inference using Stan

The Bayesian inference is performed using MCMC methods with the

program Stan. There are a number of

default inputs to the Stan functions including the number of chains and

number of samples per chain (see

?EpiNow2::stan_opts()).

To reduce computation time, we can run chains in parallel. To do

this, we must set the number of cores to be used. By default, 4 MCMC

chains are run (see stan_opts()$chains), so we can set an

equal number of cores to be used in parallel as follows:

R

withr::local_options(base::list(mc.cores = 4))

To find the maximum number of available cores on your machine, use

parallel::detectCores().

Checklist

Note: In the code below _fixed

distributions are used instead of _variable (delay

distributions with uncertainty). This is to speed up computation time.

It is generally recommended to use variable distributions that account

for additional uncertainty.

R

# fixed alternatives

generation_time_fixed <- EpiNow2::LogNormal(

mean = 3.6,

sd = 3.1,

max = 20

)

reporting_delay_fixed <- EpiNow2::LogNormal(

mean = 2,

sd = 1,

max = 10

)

Now you are ready to run EpiNow2::epinow() to estimate

the time-varying reproduction number for the first 90 days:

R

estimates <- EpiNow2::epinow(

# reported cases

data = cases,

# delays

generation_time = EpiNow2::generation_time_opts(generation_time_fixed),

delays = EpiNow2::delay_opts(incubation_period_fixed + reporting_delay_fixed),

# prior

rt = rt_prior

)

Do not wait for this to continue

For the purpose of this tutorial, we can optionally use

EpiNow2::stan_opts() to reduce computation time. We can

specify a fixed number of samples = 1000 and

chains = 2 to the stan argument of the

EpiNow2::epinow() function. We expect this to take

approximately 3 minutes.

R

# you can add the `stan` argument

EpiNow2::epinow(

...,

stan = EpiNow2::stan_opts(samples = 1000, chains = 3)

)

Remember: Using an appropriate number of samples and chains is crucial for ensuring convergence and obtaining reliable estimates in Bayesian computations using Stan. More accurate outputs come at the cost of speed.

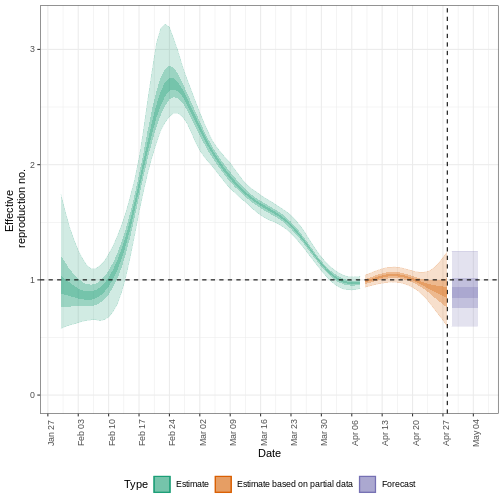

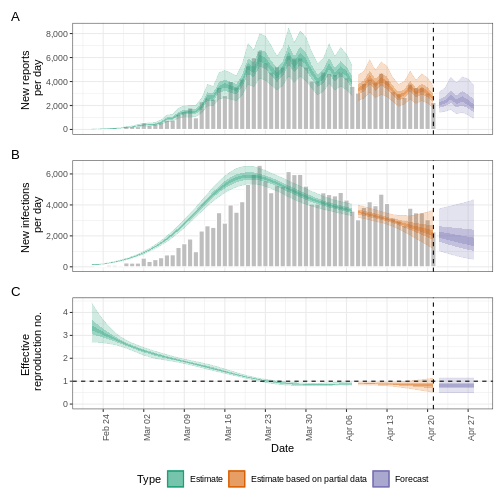

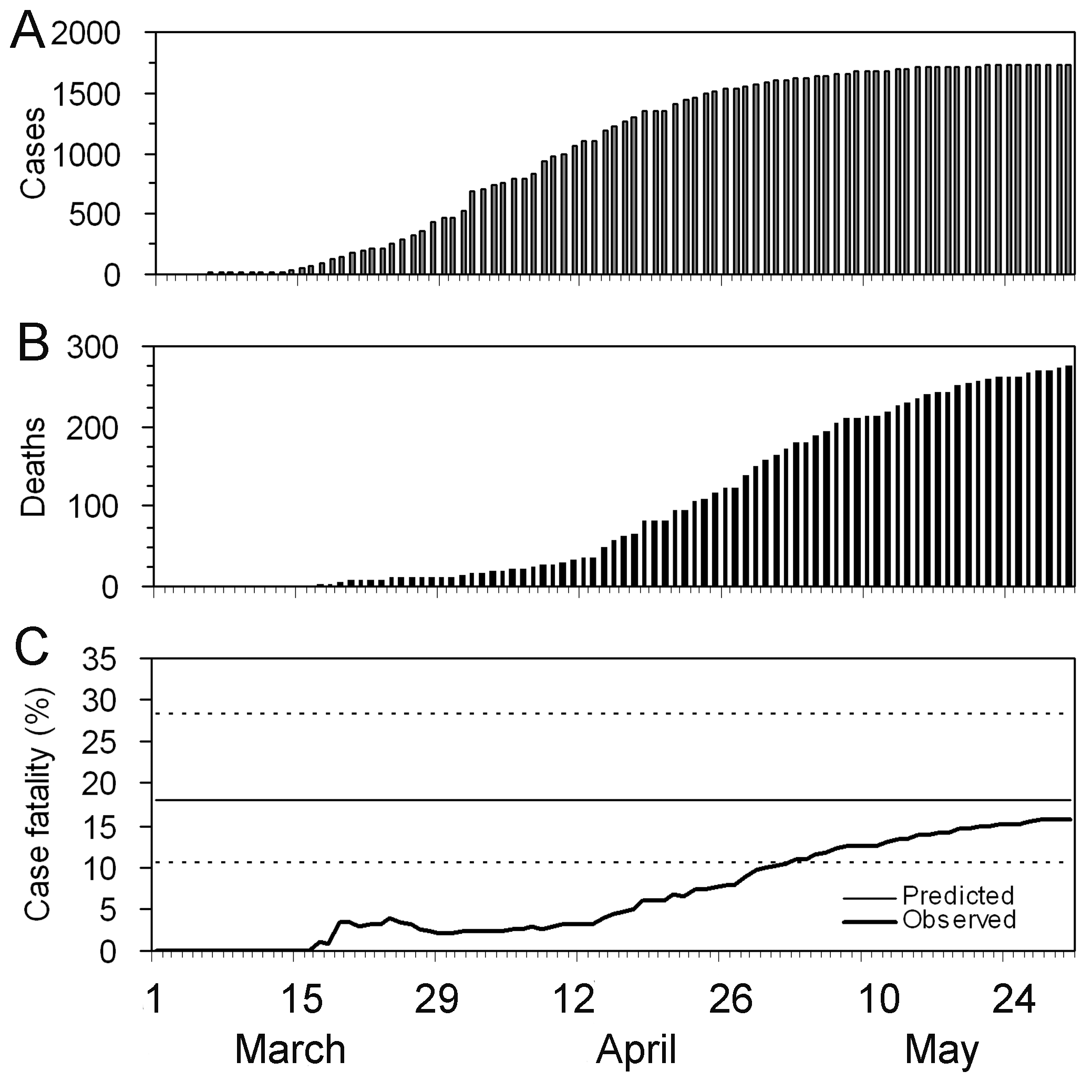

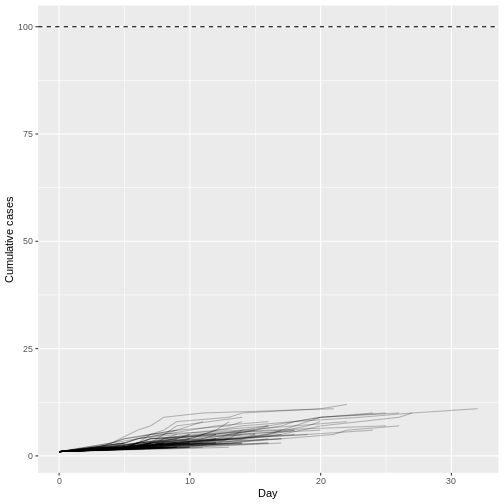

Results

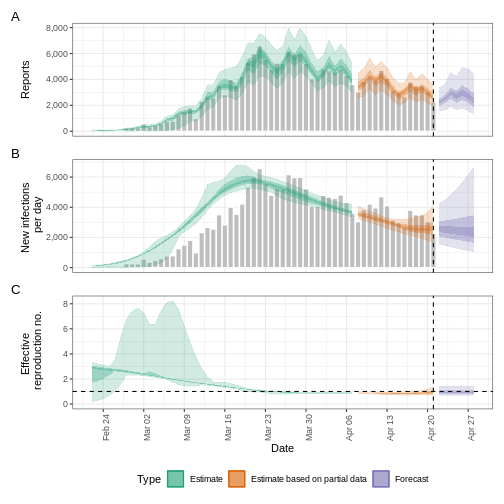

We can extract and visualise estimates of the effective reproduction number through time:

R

estimates$plots$R

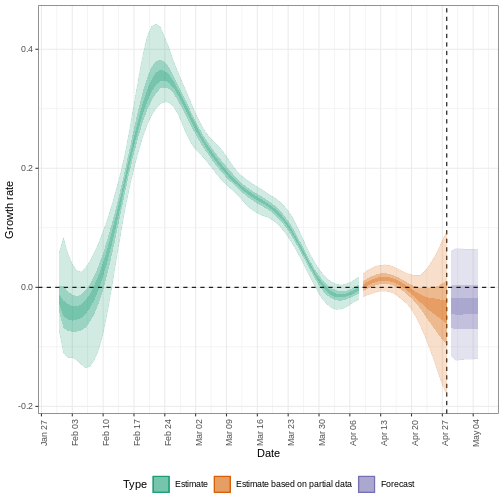

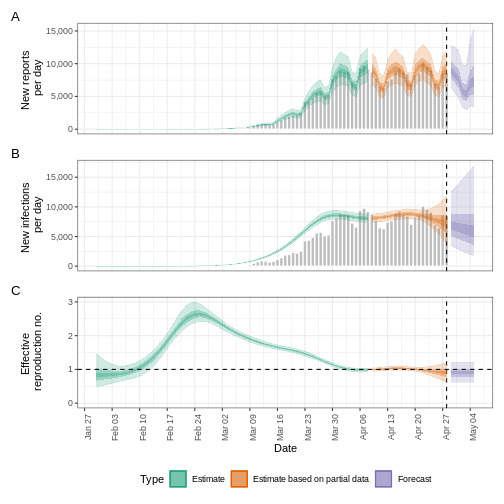

The uncertainty in the estimates increases through time. This is because estimates are informed by data in the past - within the delay periods. This difference in uncertainty is categorised into Estimate (green) utilises all data and Estimate based on partial data (orange) estimates that are based on less data (because infections that happened at the time are more likely to not have been observed yet) and therefore have increasingly wider intervals towards the date of the last data point. Finally, the Forecast (purple) is a projection ahead of time.

We can also visualise the growth rate estimate through time:

R

estimates$plots$growth_rate

To extract a summary of the key transmission metrics at the latest date in the data:

R

summary(estimates)

OUTPUT

measure estimate

<char> <char>

1: New infections per day 7904 (4778 -- 13261)

2: Expected change in reports Likely decreasing

3: Effective reproduction no. 0.96 (0.74 -- 1.3)

4: Rate of growth -0.013 (-0.1 -- 0.084)

5: Doubling/halving time (days) -55 (8.2 -- -6.6)As these estimates are based on partial data, they have a wide uncertainty interval.

From the summary of our analysis we see that the expected change in reports is Likely decreasing with the estimated new infections 7904 (4778 – 13261).

The effective reproduction number \(R_t\) estimate (on the last date of the data) is 0.96 (0.74 – 1.3).

The exponential growth rate of case numbers is -0.013 (-0.1 – 0.084).

The doubling time (the time taken for case numbers to double) is -55 (8.2 – -6.6).

Expected change in reports

A factor describing the expected change in reports based on the posterior probability that \(R_t < 1\).

| Probability (\(p\)) | Expected change |

|---|---|

| \(p < 0.05\) | Increasing |

| \(0.05 \leq p< 0.4\) | Likely increasing |

| \(0.4 \leq p< 0.6\) | Stable |

| \(0.6 \leq p < 0.95\) | Likely decreasing |

| \(0.95 \leq p \leq 1\) | Decreasing |

Credible intervals

In all EpiNow2 output figures, shaded regions reflect 90%, 50%, and 20% credible intervals in order from lightest to darkest.

Checklist

EpiNow2 can be used to estimate transmission metrics

from case data at any time in the course of an outbreak. The reliability

of these estimates depends on the quality of the data and appropriate

choice of delay distributions. In the next tutorial we will learn how to

make forecasts and investigate some of the additional inference options

available in EpiNow2.

How does {EpiNow2} work?

{EpiNow2} contains different models for how infections arise. One of the main models that is used in the default configuration is the Renewal equation model. If you want to gain familiarity with it and learn how delays are accounted for when estimating \(R_t\), you can read the episode on Introduction to the Renewal equation in the “More Resources” section of this tutorial’s website.

Challenge

Challenge

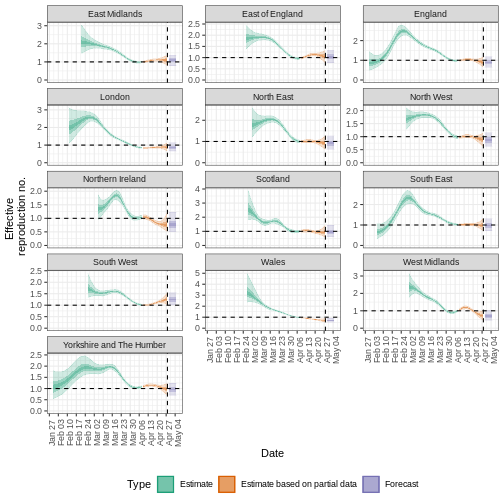

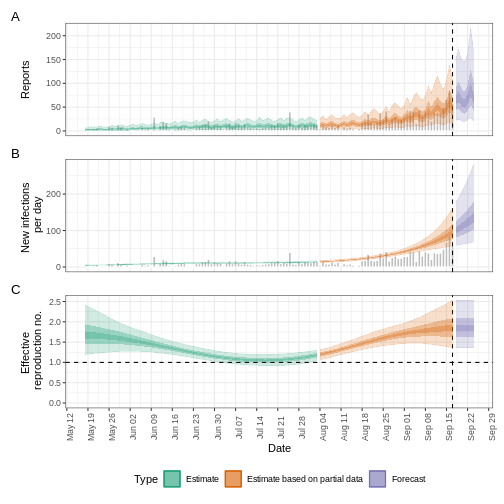

Quantify geographical heterogeneity

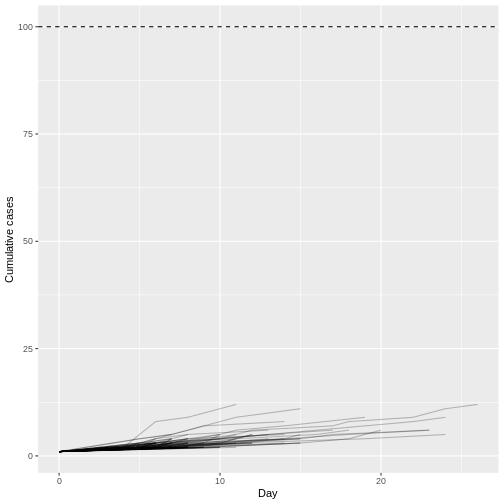

The outbreak data of the start of the COVID-19 pandemic from the United Kingdom from the R package incidence2 includes the region in which the cases were recorded. To find regional estimates of the effective reproduction number and cases, we must format the data to have three columns:

-

date: the date, -

region: the region, -

confirm: number of disease reports (confirm) for a region on a given date.

Generate regional Rt estimates from the

incidence2::covidregionaldataUK data frame by:

- use incidence2 to convert aggregated data to

incidence data by the variable

region, - keep the first 90 dates for all regions,

- estimate the Rt per region using the defined generation time and delays in this episode.

R

regional_cases <- incidence2::covidregionaldataUK %>%

# use {tidyr} to preprocess missing values

tidyr::replace_na(base::list(cases_new = 0))

To wrangle data, you can:

R

regional_cases <- incidence2::covidregionaldataUK %>%

# use {tidyr} to preprocess missing values

tidyr::replace_na(base::list(cases_new = 0)) %>%

# use {incidence2} to convert aggregated data to incidence data

incidence2::incidence(

date_index = "date",

groups = "region",

counts = "cases_new",

count_values_to = "confirm",

date_names_to = "date",

complete_dates = TRUE

) %>%

dplyr::select(-count_variable) %>%

dplyr::filter(date < ymd(20200301))

To learn how to do the regional estimation of Rt, read the Get

started vignette section on regional_epinow() at https://epiforecasts.io/EpiNow2/articles/EpiNow2.html#regional_epinow

To find regional estimates, we use the same inputs as

epinow() to the function

regional_epinow():

R

estimates_regional <- EpiNow2::regional_epinow(

# cases

data = regional_cases,

# delays

generation_time = EpiNow2::generation_time_opts(generation_time_fixed),

delays = EpiNow2::delay_opts(incubation_period_fixed + reporting_delay_fixed),

# prior

rt = rt_prior

)

Plot the results with:

R

estimates_regional$summary$summarised_results$table

estimates_regional$summary$plots$R

Key Points

- Transmission metrics can be estimated from case data after accounting for delays

- Uncertainty can be accounted for in delay distributions

Content from Use delay distributions in analysis

Last updated on 2026-02-09 | Edit this page

Overview

Questions

- How to reuse delays stored in the epiparameter library with my existing analysis pipeline?

Objectives

- Use distribution functions to continuous and discrete distributions

stored as

<epiparameter>objects. - Convert a continuous to a discrete distribution with epiparameter.

- Connect epiparameter outputs with EpiNow2 inputs.

Prerequisites

- Complete tutorial Access epidemiological delay distributions

- Complete tutorial Quantifying transmission

This episode requires you to be familiar with:

Data science : Basic programming with R.

Statistics : Probability distributions.

Epidemic theory : Epidemiological parameters, time periods, Effective reproductive number.

Introduction

This episode will integrate the content of the two previous episodes.

Let’s start by loading the epiparameter and

EpiNow2 package. We’ll use the pipe %>%,

some dplyr verbs and ggplot2, so let’s

also call to the tidyverse package:

R

library(epiparameter)

library(EpiNow2)

library(tidyverse)

To recap, we learned that epiparameter help us to choose one specific set of epidemiological parameters from the literature, instead of copy/pasting them by hand:

R

covid_serialint <-

epiparameter::epiparameter_db(

disease = "covid",

epi_name = "serial",

single_epiparameter = TRUE

)

Now, we have an epidemiological parameter that we can use in our

analysis! However, we can’t use this object directly for

analysis. For example, to quantify transmission, we often use the serial

interval distribution as an approximation of the generation time. To do

this we need to apply additional functions to an

<epiparameter> object to extract its summary

statistics or distribution parameters. These

outputs can then be used as inputs for EpiNow2::LogNormal()

or EpiNow2::Gamma(), just as we did in the previous

episode.

In this episode, we will use the distribution

functions that epiparameter provides to get

descriptive values like the median, maximum value (max),

percentiles, or quantiles. This set of functions can operate on

any distribution that can be included in an

<epiparameter> object: Gamma, Weibull, Lognormal,

Negative Binomial, Geometric, and Poisson, which are mostly used in

outbreak analytics.

You’ll need these outputs in the next episodes to power your analysis pipelines — so let’s make sure you’re comfortable working with them before moving on!

The double-colon

The double-colon :: in R lets you call a specific

function from a package without loading the entire package into the

current environment.

For example, dplyr::filter(data, condition) uses

filter() from the dplyr package.

This helps us remember package functions and avoid namespace conflicts by explicitly specifying which package’s function to use when multiple packages have functions with the same name.

Distribution functions

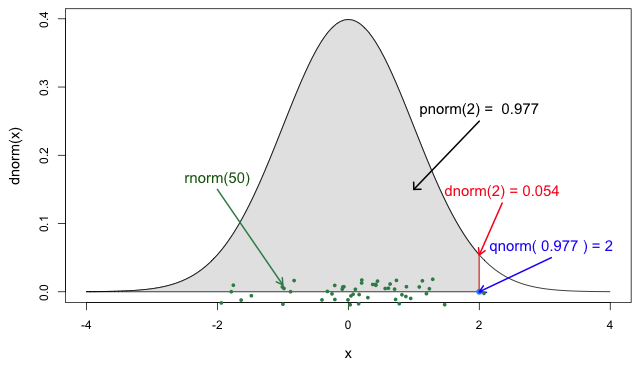

In R, all the statistical distributions have functions to access the following:

-

density(): Probability Density function (PDF), -

cdf(): Cumulative Distribution function (CDF), -

quantile(): Quantile function, and -

generate(): Random values from the given distribution.

Functions for the Normal distribution

If you need it, read in detail about the R probability functions for the normal distribution, each of its definitions and identify in which part of a distribution they are located!

If you look at ?stats::Distributions, each type of

distribution has a unique set of functions. However,

epiparameter gives you the same four functions to access

each of the values above for any <epiparameter>

object you want!

R

# plot this to have a visual reference

# continuous distribution

plot(covid_serialint, xlim = c(0, 20))

R

# the density value at quantile value of 10 (days)

density(covid_serialint, at = 10)

OUTPUT

[1] 0.01911607R

# the cumulative probability at quantile value of 10 (days)

epiparameter::cdf(covid_serialint, q = 10)

OUTPUT

[1] 0.9466605R

# the quantile value (day) at a cumulative probability of 60%

quantile(covid_serialint, p = 0.6)

OUTPUT

[1] 4.618906R

# generate 10 random values (days) given

# the distribution family and its parameters

epiparameter::generate(covid_serialint, times = 10)

OUTPUT

[1] 3.018201 5.644991 1.757889 3.559525 3.970370 8.912096 7.674003 3.095512

[9] 3.177513 9.160591Window for contact tracing and the serial interval

The serial interval is important in the optimisation of contact tracing since it provides a time window for the containment of a disease spread (Fine, 2003). Depending on the serial interval, we can evaluate the need to increase the number of days considered for contact tracing to include more backwards contacts (Davis et al., 2020).

With the COVID-19 serial interval (covid_serialint)

calculate:

- How much would expanding the contact tracing window from 2 to 6 days before symptom onset improve the detection of potential infectors?

The serial interval is the time between symptom onset in an infector and symptom onset in their infectee.

We can use the serial interval distribution to quantify the probability of detecting an infector from a new case. This probability can be calculated directly from the assumed distribution. Refer to Figure 2 in Davis et al., 2020.

For an object class <epiparameter>, the cumulative

probability can be computed using epiparameter::cdf().

R

plot(covid_serialint)

R

# calculate probability of finding backward cases

# with contact traicing window of 2 days

window_2 <- epiparameter::cdf(covid_serialint, q = 2)

window_2

OUTPUT

[1] 0.1111729R

# calculate probability of finding backward cases

# with contact traicing window of 6 days

window_6 <- epiparameter::cdf(covid_serialint, q = 6)

window_6

OUTPUT

[1] 0.7623645R

# calculate the difference

window_6 - window_2

OUTPUT

[1] 0.6511917Given the COVID-19 serial interval:

A contact tracing method considering contacts up to 2 days pre-onset will capture around 11.1% of backward cases.

If this period is extended to 6 days pre-onset, this could include 76.2% of backward contacts.

About 65.1% more backward cases could be captured when extending tracing from 2 to 6 days.

If we exchange the question between days and cumulative probability to:

- When considering secondary cases, how many days following the symptom onset of primary cases can we expect 55% of symptom onset to occur?

R

quantile(covid_serialint, p = 0.55)

An interpretation could be:

- The 55% percent of the symptom onset of secondary cases will happen after 4.2 days after the symptom onset of primary cases.

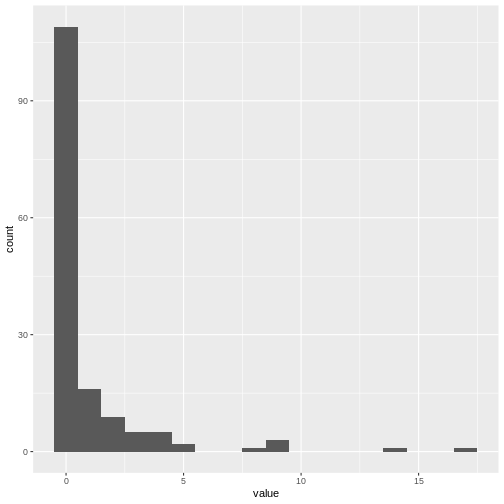

Discretise a continuous distribution

We are getting closer to the end! EpiNow2::LogNormal()

still needs a maximum value (max).

One way to do this is to get the quantile value for the

distribution’s 99th percentile or 0.99 cumulative

probability. For this, we need access to the set of distribution

functions for our <epiparameter> object.

We can use the set of distribution functions for a

continuous distribution (as above). However, these values will

be continuous numbers. We can discretise the

continuous distribution stored in our <epiparameter>

object to get discrete values from a continuous distribution.

When we epiparameter::discretise() the continuous

distribution we get a discrete distribution:

R

covid_serialint_discrete <-

epiparameter::discretise(covid_serialint)

covid_serialint_discrete

OUTPUT

Disease: COVID-19

Pathogen: SARS-CoV-2

Epi Parameter: serial interval

Study: Nishiura H, Linton N, Akhmetzhanov A (2020). "Serial interval of novel

coronavirus (COVID-19) infections." _International Journal of

Infectious Diseases_. doi:10.1016/j.ijid.2020.02.060

<https://doi.org/10.1016/j.ijid.2020.02.060>.

Distribution: discrete lnorm (days)

Parameters:

meanlog: 1.386

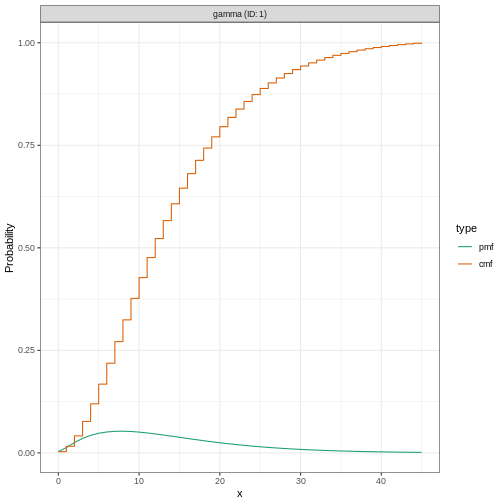

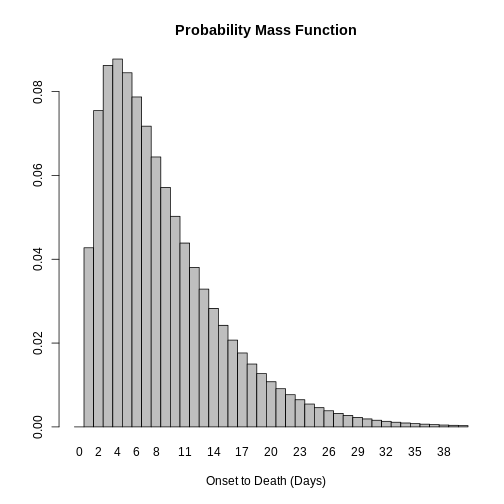

sdlog: 0.568We identify this change in the Distribution: output line

of the <epiparameter> object. Double check this

line:

Distribution: discrete lnorm (days)While for a continuous distribution, we plot the Probability Density Function (PDF), for a discrete distribution, we plot the Probability Mass Function (PMF):

R

# discrete distribution

plot(covid_serialint_discrete)

To finally get a max value, let’s access the quantile

value of the 99th percentile or 0.99 probability of the

distribution with the prob_dist$q notation, similarly to

how we access the summary_stats values.

R

covid_serialint_discrete_max <-

quantile(covid_serialint_discrete, p = 0.99)

Length of quarantine and incubation period

The incubation period distribution is a useful delay to assess the length of active monitoring or quarantine (Lauer et al., 2020). Similarly, delays from symptom onset to recovery (or death) will determine the required duration of health care and case isolation (Cori et al., 2017).

The library of parameters in the package epiparameter contains the parameters reported by Lauer et al., 2020.

Calculate:

- How many days after infection do 99% of people who will develop COVID-19 symptoms actually show symptoms? Get an integer as a response.

What delay distribution measures the time between infection and the onset of symptoms? For a refresher, you can read the tutorial Glossary.

The probability functions for <epiparameter>

discrete distributions are the same that we used for

the continuous ones!

R

# the delay from infection to onset is called incubation period

# access the incubation period for covid

covid_incubation <-

epiparameter::epiparameter_db(

disease = "covid",

epi_name = "incubation",

author = "lauer",

single_epiparameter = TRUE

)

covid_incubation

OUTPUT

Disease: COVID-19

Pathogen: SARS-CoV-2

Epi Parameter: incubation period

Study: Lauer S, Grantz K, Bi Q, Jones F, Zheng Q, Meredith H, Azman A, Reich

N, Lessler J (2020). "The Incubation Period of Coronavirus Disease 2019

(COVID-19) From Publicly Reported Confirmed Cases: Estimation and

Application." _Annals of Internal Medicine_. doi:10.7326/M20-0504

<https://doi.org/10.7326/M20-0504>.

Distribution: lnorm (days)

Parameters:

meanlog: 1.629

sdlog: 0.419R

# to get an integer as a response, discretize the distribution

covid_incubation_discrete <- epiparameter::discretise(covid_incubation)

covid_incubation_discrete

OUTPUT

Disease: COVID-19

Pathogen: SARS-CoV-2

Epi Parameter: incubation period

Study: Lauer S, Grantz K, Bi Q, Jones F, Zheng Q, Meredith H, Azman A, Reich

N, Lessler J (2020). "The Incubation Period of Coronavirus Disease 2019

(COVID-19) From Publicly Reported Confirmed Cases: Estimation and

Application." _Annals of Internal Medicine_. doi:10.7326/M20-0504

<https://doi.org/10.7326/M20-0504>.

Distribution: discrete lnorm (days)

Parameters:

meanlog: 1.629

sdlog: 0.419R

# calculate the quantile or value at the percertile 99th from the distribution

quantile(covid_incubation_discrete, p = 0.99)

OUTPUT

[1] 1399% of those who develop COVID-19 symptoms will do so within 13 days of infection.

Now, Is this result consistent with the duration of quarantine recommended in practice during the COVID-19 pandemic?

Plug-in {epiparameter} to {EpiNow2}

Now we can plug everything into the EpiNow2::LogNormal()

function!

- the summary statistics

meanandsdof the distribution, - a maximum value

max, - the

distributionname.

When using EpiNow2::LogNormal() to define a log

normal distribution like the one in the

covid_serialint object we can specify the mean and sd as

parameters. Alternatively, to get the “natural” parameters for a log

normal distribution we can convert its summary statistics to

distribution parameters named meanlog and

sdlog. With epiparameter we can directly get

the distribution parameters using

epiparameter::get_parameters():

R

covid_serialint_parameters <-

epiparameter::get_parameters(covid_serialint)

Then, we have:

R

serial_interval_covid <-

EpiNow2::LogNormal(

meanlog = covid_serialint_parameters["meanlog"],

sdlog = covid_serialint_parameters["sdlog"],

max = covid_serialint_discrete_max

)

serial_interval_covid

OUTPUT

- lognormal distribution (max: 14):

meanlog:

1.4

sdlog:

0.57LogNormal or Gamma?

Let’s say that you need to quantify the transmission of an Ebola outbreak. You will use the serial interval as an approximation of the generation time for EpiNow2. The epiparameter will give you access to the parameter estimated from historical outbreaks.

Follow these steps:

- Get access to the

serial intervaldistribution ofEbolausing epiparameter. - Access to one entry from the study with the highest sample size.

- Print the output and read the description.

- Identify which probability distribution (e.g., Gamma, Lognormal, etc.) was used by authors to model the delay.

- Extract the distribution parameters for the corresponding probability distribution.

- Calculate an exact maximum value for the delay distribution (e.g., the 99th percentile).

- Plug-in the distribution parameters from the

<epiparameter>to the corresponding probability distribution function in EpiNow2 (e.g.,EpiNow2::Gamma(),EpiNow2::LogNormal(), etc.).

When connecting epiparameter and EpiNow2, there is one step that is susceptible to mistakes.

In <epiparameter>, the output line

Distribution: determines the function to use from

EpiNow2:

We choose EpiNow2::Gamma() if in

<epiparameter> we get:

Distribution: gamma (days)

Parameters:

shape: 2.188

scale: 6.490We choose EpiNow2::LogNormal() if in

<epiparameter> we get:

Distribution: lnorm (days)

Parameters:

meanlog: 1.386

sdlog: 0.568R

# 1. access a serial interval

ebola_serialint <- epiparameter::epiparameter_db(

disease = "ebola",

epi_name = "serial",

single_epiparameter = TRUE

)

R

ebola_serialint

OUTPUT

Disease: Ebola Virus Disease

Pathogen: Ebola Virus

Epi Parameter: serial interval

Study: WHO Ebola Response Team, Agua-Agum J, Ariyarajah A, Aylward B, Blake I,

Brennan R, Cori A, Donnelly C, Dorigatti I, Dye C, Eckmanns T, Ferguson

N, Formenty P, Fraser C, Garcia E, Garske T, Hinsley W, Holmes D,

Hugonnet S, Iyengar S, Jombart T, Krishnan R, Meijers S, Mills H,

Mohamed Y, Nedjati-Gilani G, Newton E, Nouvellet P, Pelletier L,

Perkins D, Riley S, Sagrado M, Schnitzler J, Schumacher D, Shah A, Van

Kerkhove M, Varsaneux O, Kannangarage N (2015). "West African Ebola

Epidemic after One Year — Slowing but Not Yet under Control." _The New

England Journal of Medicine_. doi:10.1056/NEJMc1414992

<https://doi.org/10.1056/NEJMc1414992>.

Distribution: gamma (days)

Parameters:

shape: 2.188

scale: 6.490R

# 2. extract parameters from {epiparameter} object

ebola_serialint_params <- epiparameter::get_parameters(ebola_serialint)

ebola_serialint_params

OUTPUT

shape scale

2.187934 6.490141 R

# 3. get a maximum value

ebola_serialint_max <- ebola_serialint %>%

epiparameter::discretise() %>%

quantile(p = 0.99)

ebola_serialint_max

OUTPUT

[1] 45R

# 4. adapt {epiparameter} to {EpiNow2} distribution inferfase

ebola_generationtime <- EpiNow2::Gamma(

shape = ebola_serialint_params["shape"],

scale = ebola_serialint_params["scale"],

max = ebola_serialint_max

)

ebola_generationtime

OUTPUT

- gamma distribution (max: 45):

shape:

2.2

rate:

0.15R

# plot the `epiparameter` class object

plot(ebola_serialint)

R

# plot the `EpiNow2` class object

plot(ebola_generationtime)

Plotting distributions from the EpiNow2 interface

always gives a discretized output. From the legend: PMF

stants for Probability Mass Function and CMF stants for

Cummulative Mass Function.

Adjusting for reporting delays

Estimating \(R_t\) requires data on the daily number of new infections. Due to lags in the development of detectable viral loads, symptom onset, seeking care, and reporting, these numbers are not readily available. All observations reflect transmission events from some time in the past. In other words, if \(d\) is the delay from infection to observation, then observations at time \(t\) inform \(R_{t−d}\), not \(R_t\). (Gostic et al., 2020)

The delay distribution could be inferred jointly

with the underlying times of infection or estimated as the sum of the incubation period distribution and

the distribution of delays from symptom onset to observation from line

list data (reporting delay).

For EpiNow2, we can specify these two complementary delay

distributions in the delays argument.

Use an incubation period for COVID-19 to estimate Rt

Estimate the time-varying reproduction number for the first 60 days

of the example_confirmed data set from

EpiNow2. Access to an incubation period for COVID-19 from

epiparameter to use it as a reporting delay.

Use the last epinow() calculation using the

delays argument and the delay_opts() helper

function.

The delays argument and the delay_opts()

helper function are analogous to the generation_time

argument and the generation_time_opts() helper

function.

R

epinow_estimates <- EpiNow2::epinow(

# cases

reported_cases = example_confirmed[1:60],

# delays

generation_time = EpiNow2::generation_time_opts(covid_serial_interval),

delays = EpiNow2::delay_opts(covid_incubation_time)

)

R

# generation time ---------------------------------------------------------

# get covid serial interval

covid_serialint <-

epiparameter::epiparameter_db(

disease = "covid",

epi_name = "serial",

single_epiparameter = TRUE

)

# adapt epiparameter to epinow2

covid_serialint_discrete_max <- covid_serialint %>%

epiparameter::discretise() %>%

quantile(p = 0.99)

covid_serialint_parameters <-

epiparameter::get_parameters(covid_serialint)

covid_serial_interval <-

EpiNow2::LogNormal(

meanlog = covid_serialint_parameters["meanlog"],

sdlog = covid_serialint_parameters["sdlog"],

max = covid_serialint_discrete_max

)

# incubation time ---------------------------------------------------------

# get covid incubation period

covid_incubation <- epiparameter::epiparameter_db(

disease = "covid",

epi_name = "incubation",

single_epiparameter = TRUE

)

# adapt epiparameter to epinow2

covid_incubation_discrete_max <- covid_incubation %>%

epiparameter::discretise() %>%

quantile(p = 0.99)

covid_incubation_parameters <-

epiparameter::get_parameters(covid_incubation)

covid_incubation_time <-

EpiNow2::LogNormal(

meanlog = covid_incubation_parameters["meanlog"],

sdlog = covid_incubation_parameters["sdlog"],

max = covid_incubation_discrete_max

)

# epinow ------------------------------------------------------------------

# Set 4 cores to be used in parallel computations

withr::local_options(list(mc.cores = 4))

# run epinow

epinow_estimates_cgi <- EpiNow2::epinow(

# cases

data = example_confirmed[1:60],

# delays

generation_time = EpiNow2::generation_time_opts(covid_serial_interval),

delays = EpiNow2::delay_opts(covid_incubation_time)

)

base::plot(epinow_estimates_cgi)

Try to complement the delays argument with a reporting

delay like the reporting_delay_fixed object of the previous

episode.

How much has it changed?

Compare three runs of EpiNow2::epinow():

- Use only the generation time

- Add the incubation time

- Add the incubation time and reporting delay

After adding all delays, discuss:

- Does the trend of the model fit in the “Estimate” section change?

- Has the uncertainty changed?

- How would you explain or interpret any of these changes?

Compare all the EpiNow2 figures generated in the three runs.

Challenges

A code completion tip

If we write the [] next to the object

covid_serialint_parameters[], within [] we can

use the Tab key ↹ for code

completion feature

This gives quick access to

covid_serialint_parameters["meanlog"] and

covid_serialint_parameters["sdlog"].

We invite you to try this out in code chunks and the R console!

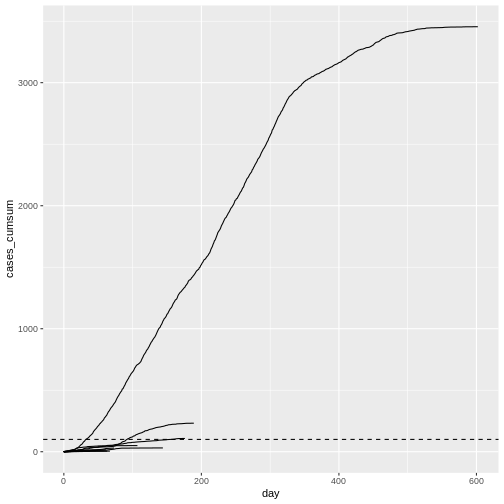

Ebola’s effective reproduction number adjusted by reporting delays

Download and read the Ebola dataset:

- Estimate the effective reproduction number using EpiNow2

- Adjust the estimate by the available reporting delays in epiparameter

- Why did you choose that parameter?

To calculate the \(R_t\) using EpiNow2, we need:

- Aggregated incidence

data, with confirmed cases per day, and - The

generationtime distribution. - Optionally, reporting

delaysdistributions when available (e.g., incubation period).

To get delay distribution using epiparameter we can use functions like:

epiparameter::epiparameter_db()epiparameter::parameter_tbl()discretise()quantile()

R

# read data

# e.g.: if path to file is data/raw-data/ebola_cases.csv then:

ebola_confirmed <-

read_csv(here::here("data", "raw-data", "ebola_cases.csv")) %>%

incidence2::incidence(

date_index = "date",

counts = "confirm",

count_values_to = "confirm",

date_names_to = "date",

complete_dates = TRUE

) %>%

dplyr::select(-count_variable)

# list distributions

epiparameter::epiparameter_db(disease = "ebola") %>%

epiparameter::parameter_tbl()

R

# generation time ---------------------------------------------------------

# subset one distribution for the generation time

ebola_serial <- epiparameter::epiparameter_db(

disease = "ebola",

epi_name = "serial",

single_epiparameter = TRUE

)

# adapt epiparameter to epinow2

ebola_serial_discrete <- epiparameter::discretise(ebola_serial)

serial_interval_ebola <-

EpiNow2::Gamma(

mean = ebola_serial$summary_stats$mean,

sd = ebola_serial$summary_stats$sd,

max = quantile(ebola_serial_discrete, p = 0.99)

)

# incubation time ---------------------------------------------------------

# subset one distribution for delay of the incubation period

ebola_incubation <- epiparameter::epiparameter_db(

disease = "ebola",

epi_name = "incubation",

single_epiparameter = TRUE

)

# adapt epiparameter to epinow2

ebola_incubation_discrete <- epiparameter::discretise(ebola_incubation)

incubation_period_ebola <-

EpiNow2::Gamma(

mean = ebola_incubation$summary_stats$mean,

sd = ebola_incubation$summary_stats$sd,

max = quantile(ebola_serial_discrete, p = 0.99)

)

# epinow ------------------------------------------------------------------

# run epinow

epinow_estimates_egi <- EpiNow2::epinow(

# cases

data = ebola_confirmed,

# delays

generation_time = EpiNow2::generation_time_opts(serial_interval_ebola),

delays = EpiNow2::delay_opts(incubation_period_ebola)